The ultimate guide to using surveys for content marketing

How to get compelling data for content marketing with original research

Eric Van Susteren

Content Strategist

Learn how to use surveys to conduct your own original research to support your content marketing efforts, creating content that can help you get media attention, leads for your business, or organic traffic to your site.

01 Overview

Everything you need to know before getting started

74% of readers find content that contains data to be more trustworthy than content without data. (Source: A SurveyMonkey Audience survey of 1,054 U.S. adults aged 18-65.)

For writers, practically nothing is as powerful as good data.

A simple statistic can do wonders: It can lend credibility to your writing, strengthen your arguments, and succinctly make a point in a way that nothing else can. There’s no better remedy for times when you’ve got a great idea to write about but nothing to support it.

Strong statistics can be the backbone of any well-researched article—whether you’re a student, a marketer, or a journalist.

Even legendary American author Mark Twain believed in their power, though maybe not their capacity for good.

There are three kinds of lies: lies, damned lies, and statistics.

It’s true that not all statistics are trustworthy. In fact, it can be downright tricky to decide which sources you can trust and which you can’t. Is it peer-reviewed? Does it come from a representative sample? Who’s funding the study? These aren’t always easy questions to answer.

Here’s a different strategy: Make your own statistics. We’re not talking about fabricating data here; anyone can make something up. We’re talking about conducting primary research: capturing, synthesizing, and reporting on people’s voices and opinions in a way that’s fair and responsible.

How do you do that? Why, with surveys, of course.

A well-designed survey sent to a representative sample of the population, with results reported fairly, is the best way to get the perfect data for any article, blog, or report.

That might sound difficult, and we’ll be honest: If you don’t know what you’re doing, it can be. But with the right know-how, anyone can use surveys to get statistics and data that support any type of content. Don’t take our word for it; ask these companies:

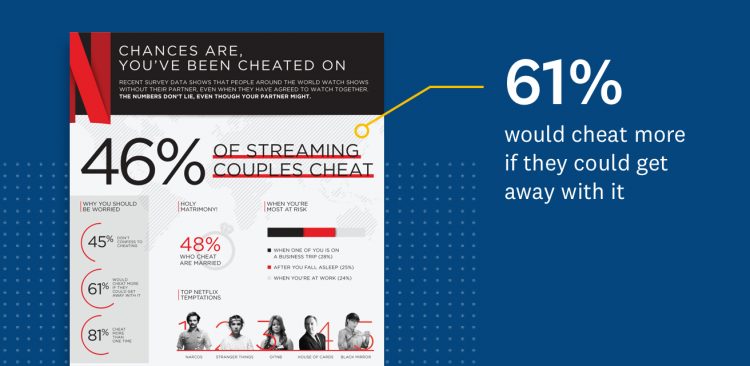

Netflix twice captured headlines everywhere by surveying Americans about their habits—both positive and negative—when it comes to streaming content with loved ones. First, by measuring how many people “Netflix cheat” on significant others by watching their favorite shows when they’re not around, then again by measuring how many people watch streaming shows with their pets.

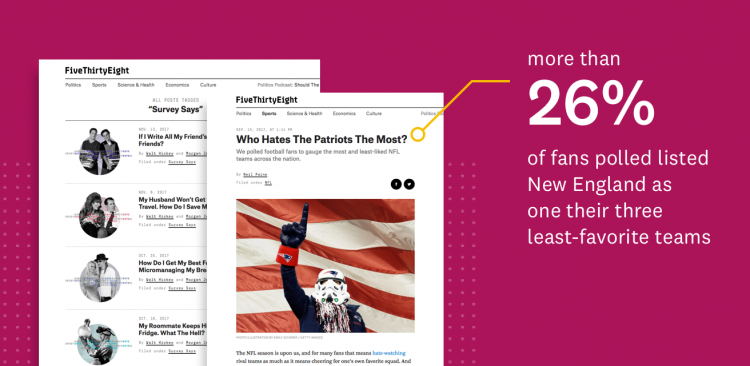

FiveThirtyEight’s celebrated pollsters are famous for interpreting other organizations’ data, but they also ran their own survey to ask a truly tough question: Who hates the New England Patriots the most?

Time Magazine used a survey to articulate the voices of women who feel squeezed by the emotional weight and pressure society places on new mothers.

Wrike’s merry band of workflow optimization pros looked at how our personal habits affect how we get work done, making the content they created from their surveys a core part of their marketing strategy. They started with a headline-grabbing study on cursing in the workplace, then made a lead-generating report on whether people truly unplug on vacation anymore.

Anyone can get this type of data for themselves, from the most decorated journalistic institutions to tech startups. In this guide, we’ll explain every step of how to create, send, and analyze surveys that deliver high-quality data—and what to do with it once you get it.

By the time you’re done reading, you’ll be able to get data on any subject you want to create your own interesting articles, valuable assets, or compelling pitches to media.

02 Project planning

Simple rules to guide your survey planning

Planning

Planning is easily the most-ignored step of the survey creation process, which is a real shame. If there’s one surefire way to make a bad content marketing survey, it’s by failing to plan.

Take the time to choose the perfect topic, develop a well-defined thesis, and target a goal that’s actually valuable for your company. When you do, everything else will fall into place. Luckily, it’s a relatively simple process with a few important rules.

Rule No. 1: Surveys are for tracking perceptions, not finding exact truth

It doesn’t matter how big your sample size is or how carefully you’ve designed your survey—a survey isn’t necessarily going to tell you the truth. Even the biggest national political polls get it wrong sometimes. Why?

- People might say they feel one way, but privately believe something else.

- Just because someone says they have an opinion doesn’t mean they are going to act on it.

- Sometimes, results can be so close that predicting outcomes can go either way.

- People might claim to have an opinion for the sake of finishing the survey, but not actually feel strongly about it.

Keep this lesson in mind when you’re designing your survey: People are unpredictable. Surveys are great for measuring sentiments, opinions, and habits, but unless you’re a professional, extrapolating your results to predict people’s exact actions is risky. Instead, think about questions that get at general attitudes, aren’t emotionally loaded, and give people space to say what’s on their mind. Be ready to apply some healthy skepticism when you get your results.

Rule No. 2: Choose a topic that’s relevant to your brand

Newspapers and magazines have the luxury of writing about lots of different subjects. But even they know where their purview starts and ends. The Economist isn’t likely to write about the Kardashians, unless it’s about their business acumen. ESPN isn’t likely to write about motherhood, unless it’s related to sports.

It’s important to consider which issues are relevant to your brand when you’re choosing which topic your content marketing survey will focus on, so that people understand why you have authority to write about it. Take Netflix as an example.

Their surveys check off all the boxes you should consider. They chose to ask about:

- A subject that applies to a ton of people (think about how many people stream TV)

- An area that’s deeply relevant to what they do as a company (watching TV)

- Most importantly, they knew readers would relate to the concept of “Netflix cheating”

As a result, dozens of media outlets picked up Netflix’s story because everyone knows what it feels like to be tempted—or be betrayed—by the subtle siren of a cliffhanger in a Netflix special.

Netflix showed people how similar they are to each other (spoiler alert: We’re all monsters), and in the process, showcased how popular their product is (in case there was any doubt). The result was way more valuable than a little extra brand awareness.

Without saying it outright, they showed why Netflix is so popular to begin with: They make content that’s so good that you’d betray your spouse over it.

When Wrike used a survey to write about the death of summer vacation, they also gave a subtle nod to the importance of what they offer as a company: efficiency and productivity.

Here’s the implication: If more people are still working even though they’ve taken time off, maybe they need help with productivity while they’re at work. That way, they wouldn’t have to worry about it while they’re away. Hey—maybe they should buy productivity software!

A survey that isn’t relevant to your brand won’t deliver any payoff or brand association. On the other hand, it’s best not to get too literal either. People don’t like feeling marketed to, so you have to find something that they actually want to read—like, for fun.

Wrike didn’t ask people about how they use project management software; they asked about something with more mass appeal that still relates to what they do.

We can’t overstate how important this step is. Choosing the right topic is the most important part of making successful data-backed content. The topics that will ultimately be most successful for you and your brand must be relevant, interesting, and broadly applicable. If you do it right, you might end up with a story that goes viral.

Rule No. 3: Decide on your goal at the outset

You’ll be spending a significant amount of time—and possibly money—to design your survey, write content about it, try to syndicate it, and more. Make sure you’re doing it for the right reasons. It’s important to set a goal for your survey content that will actually help you.

Some common goals for survey content are:

- Generating media coverage

- Driving traffic and awareness

- Steering brand perception

- Generating business leads

- Converting prospects

- Engaging customers

Your goal will almost certainly have an effect on how you approach your content marketing project. For example, when Wrike did its first survey, it used a subject and distribution strategy that focused entirely on getting attention and awareness.

Cursing in the workplace is only tangentially related to what Wrike does as a company, but they knew that it would be a subject that many people would be interested in—and maybe even relate to. The result was over 100 media mentions including a segment on the Today Show, which isn’t just another day at the office for a project management software startup.

“Cursing was a completely different direction and a risk on our part … It was really successful in PR and media and brought us into a new light, a bit edgier, sexier, successful on that end.”

The second time Wrike did a content marketing survey project, they had a different goal in mind: generating business leads. The death of vacation may be a slightly less attention-grabbing subject, but as we’ve already mentioned, it’s deeply linked to what Wrike does.

Wrike approached syndication differently, too. Instead of giving their content away to everyone, Wrike chose to “gate” the report, requiring readers to fill out a contact form before reading. The form flags the person interested in the report as a potential lead and gives salespeople contact information to follow up with.

Whatever your goal is (attention, business needs, traffic), make sure you’re creating the assets you need to support it in parallel with your survey.

Hoping to generate business leads? Make sure you’re creating and designing a flashy infographic or report, plus a gated landing page with a marketing automation tool set up to track signups for your content. (SurveyMonkey likes to use Marketo, but there are several other high-quality options, too).

Considerations

- If you need to show some solid business impact from your survey efforts, a lead generation project is your best bet.

- Successful surveys for lead generation content are tightly focused on topics relevant to the industry you’re targeting for sales. Make sure you survey people who can deliver information that matters to the people you’re selling to.

- While it may be the most valuable to your business, the industry-specific, sales-targeted nature of lead generation campaigns means they’ll generate less attention than other options.

Want to get media mentions? Work with your PR team ahead of time to develop a list of media outlets that would be interested in a story like the one you’re producing. Remember, the more widely relatable your angle is—within the bounds of your brand, of course—the more media outlets it will apply to.

Considerations

- If your results get major media attention, you’ll get a much wider reach from them than you ever would with any other option.

- Journalists are very selective about what they cover. If you want them to pick up your story, it can’t be promotional of your company at all.

- Journalists won’t cover a study with a thin sample size. If you’re surveying a general population, you need at least 1,000 respondents—and maybe half that for a more niche audience.

- If you want to position yourself as a thought leader in your industry, syndicating trend data on your industry is a great place to start.

- Similarly, if you’re having issues getting the word out about your company because journalists don’t want to write about your products, they’ll be more open to writing about newsworthy, exclusive data from you.
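As a rough illustration of why sample size matters, here is the textbook margin-of-error approximation for a percentage, z × sqrt(p(1 − p) / n), in Python. It is a sketch under simplifying assumptions: a simple random sample, 95% confidence (z = 1.96), and the worst case p = 0.5. Weighted panels and non-random samples won’t follow this formula exactly, and the thresholds individual journalists apply vary.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a percentage from a simple random sample.

    n: number of respondents
    p: expected proportion (0.5 gives the widest, most conservative margin)
    z: z-score for the confidence level (1.96 is roughly 95% confidence)
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1 - p) / n)


for n in (1000, 500):
    # Convert the proportion to percentage points for reporting.
    print(f"n={n}: ±{margin_of_error(n) * 100:.1f} percentage points")
# n=1000: ±3.1 percentage points
# n=500: ±4.4 percentage points
```

Under those assumptions, halving the sample from 1,000 to 500 widens the margin from about 3 to about 4.4 percentage points, which shows how quickly precision drops as a sample shrinks.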

Looking for site traffic? Target a search term that a lot of people search for and write a robust, SEO-friendly article that delivers unique, valuable information related to your company or industry. Link to it from your site, write articles for other sites that will let you link to it from theirs, and set up a social media plan for every relevant channel you can think of: Twitter, LinkedIn, Medium, Quora, and even Reddit, if it’s not too promotional.

Considerations

- This route gives you the most latitude for choosing your topic and including information about yourself.

- Your content may not spread if you don’t have a good distribution plan. If you choose this option, you have to make sure you’re very thorough about putting it in front of readers.

- Your survey can be as formal or informal as you’d like, which means it may be OK to have slightly smaller sample sizes.

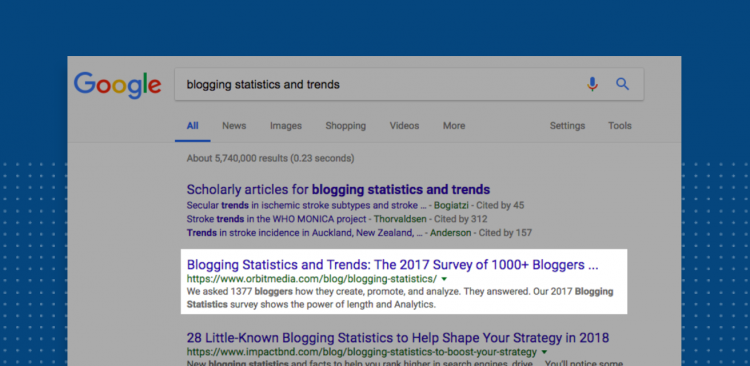

Here’s an example: Andy Crestodina, co-founder and CMO at Orbit Media Studios, has run a “Blogging Statistics and Trends” survey for the past five years. He uses SurveyMonkey to create the survey and then shares it with his network of bloggers (and their networks) via social media. Andy’s cracked the code on SEO-optimized, research-backed content.

His strategy:

- Find the “missing stat” that’s frequently stated but rarely supported. In this case, he knows a ton of people write about how blog posts take “a long time” to write and was able to finally quantify “a long time” by surveying bloggers.

- Couple those data points with a content piece optimized for the search term “[topic] statistics” to create a magnet for backlinks. Now, when people write about blogging, they search for “blogging statistics” they can use, find Andy’s piece, cite it, and boom: organically generated backlinks and boosted SEO rankings.

It’s been a hit, year after year.

“Combined, the surveys have been linked to by 1600+ websites and shared 4000+ times. I feel like I’m cheating because it works so well.”

Rule No. 4: Make a plan and a timeline

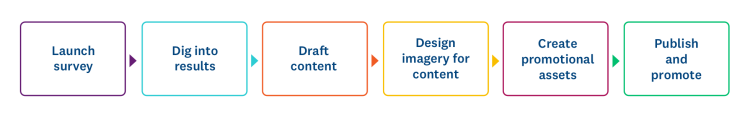

It takes a team to make a successful piece of content. Make sure all the pieces and players are aligned before you start writing a single question.

Who needs to be involved? Content writers and designers, certainly. Possibly someone with a data science background to help you interpret the data. The social media team? Check. PR? Absolutely, if you intend to publicize your content. Definitely plan on including your marketing technology folks if you want to collect and track leads to a gated asset. Before you even start your project, it’s best to keep everyone informed about your plans so there are no surprises.

What’s the timeline? Timing can be a huge component of whether a data announcement is successful. Don’t release your findings during a dead period like the holidays or a crowded news cycle (such as during Dreamforce, if you’re in enterprise sales). On the other hand, you can get a boost if you release relevant results on the same day as a small holiday or event, such as National Marketers Day. Maybe your content supports a larger campaign in a few months. Maybe you need to deliver some leads by the end of the quarter. Whatever the case may be, work backward from your deadline and give yourself enough cushion (we recommend at least a month for more involved projects) so everyone can get their piece of the work done.
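Working backward from a deadline is simple date arithmetic. The sketch below is a hypothetical Python example: the task names, their order, and their durations are assumptions for illustration only, and it counts calendar days rather than business days.

```python
from datetime import date, timedelta

# Hypothetical tasks and durations in days, listed in the order they must happen.
# Replace these with your own team's estimates.
TASKS = [
    ("Write and test survey", 7),
    ("Field survey and collect responses", 7),
    ("Analyze results", 5),
    ("Write and design content", 10),
    ("PR outreach before launch", 5),
]


def schedule_backward(release: date, tasks):
    """Return (task, start, end) tuples so the last task ends on the release date."""
    plan = []
    end = release
    # Walk from the last task to the first, subtracting each duration.
    for name, days in reversed(tasks):
        start = end - timedelta(days=days)
        plan.append((name, start, end))
        end = start
    return list(reversed(plan))  # back in chronological order


for name, start, end in schedule_backward(date(2024, 6, 3), TASKS):
    print(f"{start} -> {end}: {name}")
```

With these made-up durations the project needs 34 days, so the first task has to start at the end of April for a June 3 release, in line with the month-or-more cushion suggested above.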

Centralize your plans: Once you’ve gathered all the information you need from everyone involved, write everything down in a document that anyone can reference. Make sure to note when each person can begin their piece of the project. Writing and publicizing really effective survey content is deceptively difficult, but if you plan correctly, it will all go smoothly.

03 Design

How to create a survey that will yield valuable data

So you’ve developed the perfect idea for an article that fits your brand, meets your goals, and works for other initiatives and campaigns you’re pursuing. It’s relevant, it’s interesting, you’ve even got a clicky headline in mind for it. Time to jump in and start writing questions, right?

Wait!

To write an effective survey, visualize the end result

The key to any good survey, content marketing surveys included, is focus. The best surveys address a single, clear research question, around which all the other questions are structured. Surveys that try to answer several unrelated questions will often deliver weak results. Surveys with no research question behind them at all are usually the weakest: they wade into a topic with no initial direction, only a general interest in “exploring” it.

It’s best to deeply research your survey topic before you ever start writing questions. If you’re thinking “let’s see what we can find out about this topic” when you’re writing your survey, the outcome will often be a lot of disparate, unfocused, or superficial data.

Imagine what the end result of your survey will be. You’re going to write an article exploring a thesis (your main research question) with a body that’s filled out with a few key points that support that thesis. The data from your survey questions should support those key points.

Before you write any survey questions

- Write a clear thesis that you want to explore with your survey. It’s OK if your thesis is proven wrong later (we find it often is), but choose an argument or direction to focus on.

- Do some preliminary research to determine which points will best support your thesis.

- Think about the data points you’d need to support those main points.

- Write questions that will help you get those data points.

Here’s an example from SurveyMonkey’s own survey about marketers that we’ve simplified to fit this guide:

Thesis: Creating attention-grabbing marketing campaigns is a difficult, high-stakes process and many people may doubt its efficacy.

General attention

- How often would you say you click on ads online?

- Which type of marketing or advertising content is most likely to catch your attention online?

- Which type of marketing or advertising theme is most likely to catch your attention?

Marketing fails

- How likely are you to permanently stop using a product or service because their marketing or advertising was offensive to you?

- Which of the following words would you use to describe your company’s marketing team?

- Thinking about the offensive campaigns or advertising that you’ve seen or heard about, which of these is closer to your thinking, even if neither is exactly right?

Perception of marketers

- How well do you understand what your marketing team contributes to your company?

- Which of the following words would you use to describe your company’s marketing team?

- What is the one word you would use to describe your company’s marketing team?

We’re not suggesting that you should lead your audience to say what you want them to say. Under no circumstances should you sacrifice the validity of your results to make punchier data points. Instead, structure your questions in a way that will get you definitive answers rather than scattered results.

Balancing objectivity and direction can be tough. If you’re new to writing surveys, it’s worth reviewing the five most common pitfalls beginners face.

Choosing the right question types

Now that you know what information you need to support your key points, it’s time to start writing the questions that will help you get that data. Not every question type is equal. Each has pros and cons that make it better for some things than others.

Likert scale questions

Likert scale questions, which ask respondents to rate something from one extreme to another (from strongly agree to strongly disagree, for example), are the most focused type of closed-ended question.

How likely are you to click on ads online?

- Very likely

- Somewhat likely

- Neither likely nor unlikely

- Somewhat unlikely

- Very unlikely

Likert questions are your best bet for getting strong, focused answers from your respondents about a single issue. Use Likert scale questions if you want to get a simple standalone data point, like “40% of people say they sometimes click on ads online.”

Likert scale questions may be relatively dry, but they’re clear and consistent, which makes them perfect for avoiding bias. If you want to ask a controversial or important question, it’s best to use this type of question.

It’s a good idea to use at least one Likert scale question for each of the major supporting points of your article to get a firm, trustworthy answer on it.

Tip: SurveyMonkey Genius can help you choose which scale fits your question best and will automatically estimate how successful your survey will be before you send it.
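A single Likert data point like the 40% figure above is often reported as a “top-two-box” share: the percentage of respondents who picked either of the two most positive scale points. The Python sketch below computes it for the example scale; the 20 answers are invented purely to show the arithmetic.

```python
from collections import Counter

# The five-point scale from the example question, most positive first.
SCALE = [
    "Very likely",
    "Somewhat likely",
    "Neither likely nor unlikely",
    "Somewhat unlikely",
    "Very unlikely",
]


def distribution(responses):
    """Percentage of respondents choosing each scale point, in scale order."""
    counts = Counter(responses)
    total = len(responses)
    return {option: 100 * counts[option] / total for option in SCALE}


def top_two_box(responses):
    """Share of respondents who chose either of the two most positive options."""
    dist = distribution(responses)
    return dist[SCALE[0]] + dist[SCALE[1]]


# Hypothetical answers to "How likely are you to click on ads online?"
answers = (
    ["Very likely"] * 2
    + ["Somewhat likely"] * 6
    + ["Neither likely nor unlikely"] * 4
    + ["Somewhat unlikely"] * 5
    + ["Very unlikely"] * 3
)
print(f"{top_two_box(answers):.0f}% say they are likely to click on ads online")
# 40% say they are likely to click on ads online
```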

Multiple choice questions

These are questions with answer options that are written by the survey creator. In other words, instead of using a predetermined structure for the answer options, the way Likert scale questions do, you get to make up your own answer options.

Which type of marketing or advertising theme is most likely to catch your attention?

- Happy

- Sad

- Funny

- Sweet or endearing

- Scary

- Informational

Since you get to write your own answer options, custom answer option questions can often provide choices that are a lot more colorful than their Likert cousins. But they also carry a few concerns.

By writing answer options for respondents, you’re forcing them to play by your rules. What if they wanted to choose an option that’s not on the list? They’ll usually choose something that doesn’t exactly match their views. In this way, you can accidentally bias your results by imposing your answer options on respondents.

You can reduce this type of bias in four ways:

- Add an “other (please specify)” answer option

- Add a “none of these” answer option

- Add answer option randomization

- Don’t require respondents to answer the question

These types of questions are still very useful and will probably make up the majority of your survey. But it’s best to use Likert scale questions to address your most important points. Once you’ve gotten a solid answer, custom answer option questions are great for “supporting questions” or questions that add color to your answers.
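Survey platforms usually handle answer randomization with a setting, but the idea is easy to sketch in Python: shuffle the substantive options separately for each respondent while keeping catch-all options such as “Other (please specify)” and “None of these” at the bottom. Pinning those catch-alls in place is a common convention we’re assuming here, not a rule stated in this guide.

```python
import random

# Catch-all options that stay in place at the end of the list (assumed convention).
ANCHORED = {"Other (please specify)", "None of these"}


def randomized_options(options, seed=None):
    """Shuffle answer options for one respondent, keeping catch-all options last."""
    rng = random.Random(seed)  # a per-respondent seed makes the order reproducible
    movable = [o for o in options if o not in ANCHORED]
    fixed = [o for o in options if o in ANCHORED]
    rng.shuffle(movable)
    return movable + fixed


themes = [
    "Happy", "Sad", "Funny", "Sweet or endearing", "Scary", "Informational",
    "Other (please specify)", "None of these",
]
print(randomized_options(themes, seed=42))
```

Because every respondent sees a different order, no single theme benefits from always appearing first, which is the order bias randomization is meant to spread out.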

Single-answer questions vs. multiple-answer questions

Letting respondents choose more than one answer option per question makes a bigger difference to your data than you’d think.

Multiple-answer questions

These question types allow respondents to select multiple answer choices, and are sometimes referred to as “select all that apply” questions. Since respondents can give as many answers as they want, they tend to select several. This generally affects your data in two ways:

- Each answer option tends to be selected more often.

- Since respondents “spread out” their responses, the differences between answer options are usually much less pronounced.

This means multiple-answer questions can often present bigger standalone data points but aren’t normally the best for comparing and contrasting the options.

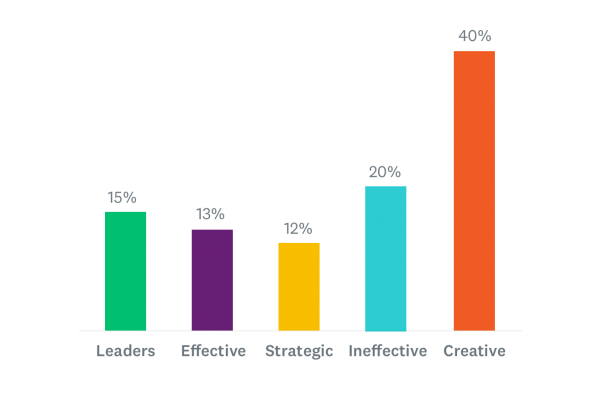

Which of the following words would you use to describe your company’s marketing team? (Select all that apply)

- Leaders

- Effective

- Strategic

- Ineffective

- Creative

- Out of touch

- Innovative

Some respondents might select several, or even all, of the answer options in the example above. You’ll be able to get good standalone data points like “60% of people say their company’s marketing team is creative,” but the differences between answer options will usually be muddied by the extra noise.

Tip: The fewer the answer options, the more likely it is that respondents will select the options you want to make a statement about. Try to limit extraneous or unimportant answer options.

Single-answer questions

These question types force respondents to select a single answer option instead of multiple. Since respondents can only choose one answer option, the effect on your data will usually be the opposite of multiple-answer questions:

- Each answer option will have fewer respondents overall.

- The differences between each answer option will tend to be greater.

This makes these question types ideal for comparing and contrasting answer options. But since each option will be selected by a smaller share of respondents, the standalone data points will be less impressive. Take the following for example:

Which of the following words would you use to describe your company’s marketing team? (Select one)

- Leaders

- Effective

- Strategic

- Ineffective

- Creative

- Out of touch

- Innovative

With only a single answer to choose, our standalone data point might not be as punchy as in the previous example (for instance, only 25% of people might now say their company’s marketing team is creative). However, we can now compare answer options more accurately: “25% of people say their company’s marketing team is creative, while 18% say they’re ineffective.”
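The contrast between the two question types comes straight from the arithmetic. In both cases each percentage is a share of respondents, so when people can pick several options the percentages add up to more than 100%. The Python sketch below uses hypothetical answers from the same four imaginary respondents to both versions of the question.

```python
from collections import Counter


def percent_of_respondents(answers):
    """answers: one list of selected options per respondent.

    Each percentage uses the number of respondents as its denominator, so for
    "select all that apply" questions the percentages can sum to more than 100%.
    """
    n = len(answers)
    # set() ensures a respondent is counted at most once per option.
    counts = Counter(option for selected in answers for option in set(selected))
    return {option: 100 * c / n for option, c in counts.items()}


# Hypothetical responses: multiple-answer version vs. single-answer version.
multi = [
    ["Creative", "Strategic"],
    ["Creative", "Ineffective"],
    ["Creative", "Innovative", "Leaders"],
    ["Effective"],
]
single = [["Creative"], ["Ineffective"], ["Innovative"], ["Effective"]]

print(percent_of_respondents(multi))   # "Creative" reaches 75%; total is 200%
print(percent_of_respondents(single))  # each option is 25%; total is 100%
```

The multiple-answer version produces the punchier standalone number, while the single-answer version forces a trade-off between options that makes comparisons meaningful.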

Open-ended questions

Open-ended questions can be really valuable because they allow respondents to answer in their own words. While closed-ended questions let respondents choose the answer option they agree with the most, open-ended questions let them say exactly what they mean. There are some downsides, though.

- It’s hard to quantify their responses into charts and graphs.

- Open-ended questions take a lot more effort for your respondents to answer.

- It can be difficult to parse the responses and use them in a meaningful way.

For these reasons, it’s often better to limit the number of open-ended questions you ask when you’re writing a content marketing survey. When you do use them, they can be pretty effective in two ways:

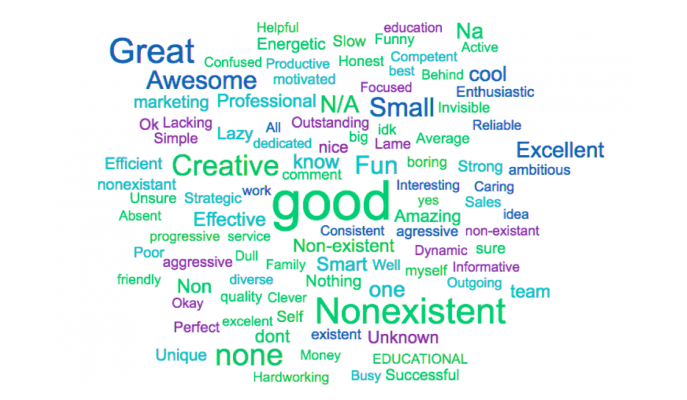

Use them in word clouds

Word clouds are great tools for distilling the essence of your respondents’ answers to your open-ended questions into a readable format. If you’re not already familiar with them, word clouds take the most commonly used words and phrases from your open-ended question responses and make a collage of words, where the most popular ones appear biggest. They usually look something like this:

If you’re only asking an open-ended question so that you can make a word cloud, we recommend you do as much work for respondents as possible, and ask for as little as possible.

For example:

What is the one word you would use to describe your company’s marketing team?

You can also ask respondents to answer in a complete sentence; if you’re using SurveyMonkey, the software will automatically filter out words that aren’t “keywords.” But regardless of whether you decide to use a word cloud, it’s always best to make things as easy as possible for your respondents, so you end up with more responses.
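Under the hood, a word cloud is mostly a word-frequency count: the more often a word appears, the bigger it’s drawn. The Python sketch below is a generic illustration, not how SurveyMonkey’s keyword filtering works; the tiny stopword list and the sample answers are assumptions.

```python
import re
from collections import Counter

# A tiny, hypothetical stopword list; real tools use much larger ones.
STOPWORDS = {"a", "an", "and", "the", "they", "are", "is", "very", "of", "to", "our"}


def word_frequencies(responses, top_n=10):
    """Count how often each non-stopword appears across open-ended responses."""
    words = []
    for text in responses:
        # Lowercase so "Creative" and "creative" are counted together.
        for word in re.findall(r"[a-z']+", text.lower()):
            if word not in STOPWORDS:
                words.append(word)
    return Counter(words).most_common(top_n)


answers = ["Creative", "creative and strategic", "Overworked", "They are very creative", "Strategic"]
print(word_frequencies(answers))
# [('creative', 3), ('strategic', 2), ('overworked', 1)]
```

This also shows why one-word answers are easiest to work with: full sentences add filler words that have to be filtered out before the counts mean anything.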

Look for ‘the perfect quote’

Sometimes a respondent will say something that perfectly encapsulates your point and articulates it better than any statistic or data point ever could. Of course, you won’t get “the perfect quote” from your respondents every time, but when you do, it can be a fantastic asset. Either way, you can usually find some interesting tidbits. They won’t always be presentable, so they might serve better as background for you than as a polished quote.

Finally, don’t forget to consider your own effort. The best surveys will have north of 500 responses, which means you may have to read through hundreds of answers to find a good one. Only ask for answers you’re willing to do the legwork for.

Screening questions

Sometimes you want to narrow down your respondents so you’re only hearing from one type of person (for example, millennials with cars). Most survey panels, like SurveyMonkey Audience, have targeting parameters that allow you to choose who gets your survey. But if you’re sending your survey to a broad sample, or want to get more specific than your targeting parameters allow, screening questions can be a good way to weed out respondents you don’t want to hear from.

Screening questions usually come at the beginning of your survey, and they allow you to disqualify respondents you aren’t interested in surveying.

For example, say you’re surveying a general population, but you’re really only interested in hearing from employed respondents. You can use a screening question to ask respondents about their employment status and disqualify them if they say they’re unemployed.
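In a survey tool you’d set this up with disqualification logic in the editor rather than in code, but the rule itself is a simple filter. Here is a hypothetical Python sketch of the employment example; the field name and answer labels are invented for illustration.

```python
def passes_screen(response):
    """Hypothetical screening rule: keep only respondents who report being employed."""
    employed = {"Employed full-time", "Employed part-time", "Self-employed"}
    return response.get("employment_status") in employed


respondents = [
    {"id": 1, "employment_status": "Employed full-time"},
    {"id": 2, "employment_status": "Unemployed"},
    {"id": 3, "employment_status": "Self-employed"},
]
qualified = [r for r in respondents if passes_screen(r)]
print([r["id"] for r in qualified])  # [1, 3]
```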

Skip logic questions

Instead of disqualifying respondents from your survey, maybe you just want them to answer a different set of questions. Skip logic questions allow you to “skip” respondents to a different part of your survey, either so they don’t have to answer questions that don’t apply to them or so that they can answer an entirely different set of questions.

For example, when we ran a survey asking Americans who they’d root for now that the U.S. had failed to qualify for the World Cup, we first asked how closely they followed soccer, and routed them to different questions depending on their answer. We wanted opinions from both experts and casual fans, but not necessarily about the same topics.

We wanted to ask experts about individual player performance, but knew casual fans might not have very informed opinions. We were still interested in hearing from both groups about who they were planning to root for, though! A skip logic question at the beginning of the survey let us do both.
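Survey tools configure skip logic through their editors, but the routing is just a conditional on an earlier answer. Below is a hypothetical Python sketch of the World Cup example; the answer labels and question-block names are invented, since the original survey’s wording isn’t given here.

```python
def next_block(soccer_interest):
    """Route respondents based on how closely they follow soccer (hypothetical labels)."""
    if soccer_interest in {"Very closely", "Somewhat closely"}:
        return "player_performance"  # questions engaged fans can answer well
    return "casual_fan"  # lighter questions for everyone else


def blocks_for(soccer_interest):
    """Full path through the survey: shared opener, routed block, shared closer."""
    return ["soccer_interest", next_block(soccer_interest), "who_will_you_root_for"]


print(blocks_for("Very closely"))
print(blocks_for("Not at all"))
```

Both paths end at the same “who will you root for” block, which is how one survey can hear from experts and casual fans on the shared question while asking each group different things elsewhere.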

Demographic questions

Demographic questions ask your respondents for information about themselves that may not be directly related to the subject of your survey. They normally come in a group at the end of your survey and are useful for seeing how different demographics answer questions in your survey differently. Want to know if men are more likely to Netflix cheat than women? Ask a demographic question about gender and filter by question and answer to see how each group answered.
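In SurveyMonkey you’d do this with filters in the results view; the underlying arithmetic is a simple cross-tabulation, sketched below in plain Python. The responses and field names (gender, netflix_cheated) are made up for illustration. Keep in mind that each subgroup is smaller than your full sample, so its percentages carry a wider margin of error.

```python
from collections import Counter, defaultdict


def crosstab(rows, group_key, answer_key):
    """Percentage of each demographic group giving each answer."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[row[group_key]][row[answer_key]] += 1
    table = {}
    for group, answers in counts.items():
        total = sum(answers.values())  # denominator is the group's size, not the sample's
        table[group] = {answer: 100 * n / total for answer, n in answers.items()}
    return table


rows = [
    {"gender": "Woman", "netflix_cheated": "Yes"},
    {"gender": "Woman", "netflix_cheated": "No"},
    {"gender": "Man", "netflix_cheated": "Yes"},
    {"gender": "Man", "netflix_cheated": "Yes"},
    {"gender": "Man", "netflix_cheated": "No"},
    {"gender": "Woman", "netflix_cheated": "No"},
]
print(crosstab(rows, "gender", "netflix_cheated"))
```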

Match question types to your survey

Now that we’ve assigned question types to each of our questions, our survey is really starting to come together.

- Each of our major supporting points is supported by a direct Likert scale question.

- We make good use of custom answer questions to add more context and color to the issues we’ve addressed with our Likert scale questions.

- Each supporting point is thoroughly explored using a mix of question types and angles.

- We use one final open-ended question that addresses our thesis.

Thesis: Creating attention-grabbing marketing campaigns is a difficult, high-stakes process and many people may doubt its efficacy.

General attention

- How often would you say you click on ads online?

- Which type of marketing or advertising content is most likely to catch your attention online?

- Which type of marketing or advertising theme is most likely to catch your attention?

Marketing fails

- How likely are you to permanently stop using a product or service because their marketing or advertising was offensive to you?

- Which of the following words would you use to describe your company’s marketing team?

- Thinking about the offensive campaigns or advertising that you’ve seen or heard about, do you feel offensive advertising campaigns represent a company’s values?

Perception of marketers

- How well do you understand what your marketing team contributes to your company?

- Which of the following words would you use to describe your company’s marketing team?

- What is the one word you would use to describe your company’s marketing team?

Creating an intuitive survey

By now you should have an idea of how you’ll format the questions in your survey and what they’ll ask. Now you can design your survey so that it’s intuitive and easy to take. It might even be fun! The good news is that if you’ve followed all the steps in this guide up to this point, a lot of these tips will already be covered.

Vary question types

Don’t let your respondents slip into autopilot. Likert questions are great, but if you give respondents 10 questions in a row that are identical except for one or two words, they’re going to stop paying attention. Keep your respondents engaged: stimulating surveys mix question types, cover different subjects, and change up their wording so respondents provide information in different ways.

Group questions by theme

Surveys are a great way to explore a theme or argument, and there’s often a lot of ground to cover. Priming your respondents is important for getting them to consider each question in the right context. Your survey should have a logical flow that’s easy for respondents to follow. Some questions won’t make as much sense to respondents without the context of the questions before them. If a question seems like a total non-sequitur, respondents may not consider the overall theme as closely.

Move from general to specific

For each theme in your survey, it’s best to start with the most general questions and then move into more specific ones. As with grouping questions by theme, it’s important to give respondents context for the types of questions they’re answering, especially if those questions are very specific or personal. Starting each theme with a broad question about how respondents feel about the issue (Likert questions are great for this) is a great cue that this area of your survey will focus on a particular theme.

Use multiple pages (but not too many)

Just like chapters in a book, pages in your survey are a great way to cleanse respondents’ palates and get them to start considering a new theme or idea. Using multiple pages, usually between 3 and 6, is also a good way to keep respondents engaged. Don’t use too many, though. It can be annoying to click through lots of pages, especially for respondents on mobile devices.

Bonus: No matter what you do, some of your respondents are going to lose interest in your survey and drop out. If you make your survey in SurveyMonkey, their responses on the previous pages are saved each time a respondent moves to a new page. That way, even if they quit, you still keep some of their responses.

Here’s an example of what our productivity survey might look like with the appropriate number of pages and thematically grouped questions.

Survey length and question difficulty

Asking your respondents too many questions will cause them to lose interest and either drop out of your survey or, worse, keep going without paying attention. That said, some question types are less taxing for respondents than others.

No matter which question types you use in your survey, we recommend you keep it under 25 questions—hopefully fewer.

Likert questions: Least taxing. Likert questions follow a predictable pattern that most people are familiar with, even if they don’t take a lot of surveys. They allow respondents to reply based purely on sentiment toward the prompt, rather than carefully considering which answer option is best. To get scientific about it, it’s much less difficult to choose how much you agree with a statement or how strongly you feel about an idea than it is to weigh the importance of different concepts in your mind.

Custom answer option questions (single answer): Moderately taxing. Even though custom answer option questions are closed-ended, they’re more taxing because respondents have to carefully read through each answer option to determine which one fits their opinion best. But this really depends on the question. If you asked respondents to choose their favorite ice cream flavor, it might be easy for some people. Others might have a really hard time choosing between mint chip and chocolate chip cookie dough, thus making it more taxing to answer.

Note: Demographic, screening and skip logic questions normally fall into this category.

Custom answer option questions (multiple answer): Moderately taxing. One benefit is that respondents don’t need to weigh which answer option is best, because they can just select all that apply. However, the more interactions (clicks or taps) respondents have with your survey, the more time and effort it takes to complete.

Open-ended questions: Very taxing. Open-ended questions force your respondents to come up with their own answers and type them in. Remember that more than 30% of respondents are on mobile devices, so they’ll be typing their responses on a tiny screen! It’s best not to include more than two open-ended questions in your survey.

Our workplace productivity survey comes in at 17 questions, including the screening question. The demographic questions we’ve included come free with SurveyMonkey Audience, but we could add more if we wanted to.

04 Sending

Deciding how to send your survey

Who you send your survey to and the types of questions you ask depend entirely on the goal you set at the outset of your project.

As we’ve already mentioned, some types of content marketing projects might be less “serious” and therefore won’t require quite as much rigor. But if you’re going to create a piece of content that you’re intending to “go viral,” get picked up by PR or be used as a resource to people in your industry, it’s best to make sure that your survey is as sound as possible.

Who you send your survey to and the ways you send it can have a pretty big impact on your final result. Let’s take a look at three of the most popular ways for sending a content marketing survey:

Poll people on social media

Well-designed online quizzes and surveys can be a pleasant diversion, and people on social media love diversions.

Fun social media surveys about subjects that are relevant to your brand or customers (see the planning section) are customer touchpoints in themselves. And when you invite followers to be a part of your study, they get invested. They’ll want to know how their answers stack up to others, which will naturally make them interested in the content you create from their responses.

Social media surveys are a potent way to engage with your followers, but sending surveys this way is generally considered the least scientifically rigorous distribution method. That’s why they’re usually best used for light-hearted topics that are relevant to your followers. Let’s dive into some of the benefits and drawbacks of this sampling method.

Benefits

- Ease. Quick, easy to send, and free.

- Double-dipping engagement. Social followers like fun, relevant surveys AND the content you create from them.

Drawbacks

- Not scientific. Your Twitter followers are not a representative sample of a larger population.

- Low response rates. If you don’t have many followers, you might have a tough time getting enough respondents.

- Closed circuit. A survey of social media followers probably won’t be interesting to anyone but your social media followers. Pickups from PR and journalists, as well as partnerships, are likely out of the question.

Email your contacts or customer base

If your company has been around for a while, you’ve likely got a treasure trove of customers who can make wonderful survey respondents for the right type of project. Surveying customers can be a delicate act, though. These are the people paying your bills, so it’s best not to annoy them with errant surveys on random topics.

Instead, home in on your product or brand. If you sell email marketing software, ask marketers about how they send emails. If you work in interior design, ask contractors about their business outlook.

“State of the industry” stories that ask people in a particular industry (read: your customers) about something they’re experts in are great for business.

They’re often legitimately valuable to people in your industry, which positions you as a thought leader and can help you build new business—especially when you “gate” the asset to collect sales leads.

And since your customers know your products better than anyone, surveying them can also be a great source for sales content, like testimonials, case studies, and stats on ROI or key benefits.

Benefits

- Business. Surveys sent to your customers are often the best option for valuable “business insights” content.

- Relevancy. Data based on your customers’ jobs will be intrinsically interesting to them—and prospective customers.

- Ease. They’re free and easy to send if your customer email lists are in order.

Drawbacks

- Not scientific. Again, your customer base isn’t representative of the population at large, but it’s OK to ask them about their jobs.

- Annoyance. Your customers are your company’s lifeblood, so it’s best not to bug them with too many surveys or surveys that aren’t relevant.

- Narrow appeal. In most cases, the content from surveys to customers isn’t going to have appeal outside your industry (but it’s still valuable appeal).

Use a global survey panel

By far the most versatile and scalable way to send surveys is with a survey panel. If you want to send a survey using a survey panel, it’s extremely important that it’s trustworthy. A good survey panel gives you access to qualified survey respondents around the world using a responsible incentive model that rewards respondents for thoughtful answers.

Find a targeted group of people to take your survey

When surveying your customers or followers isn’t right for your survey topic, use SurveyMonkey Audience to find the people you need.

Since they allow you to easily reach a general audience, survey panels are much more flexible than the other choices already mentioned. You can ask anyone anything you want (but please, still refer to the planning section to find a topic that makes sense). While it’s easy to use them to reach a general population, most survey panels will have targeting criteria that can narrow the pool of respondents you send to.

Benefits

- Scientifically valid. With a reputable panel and a high enough sample size, you can get truly trustworthy data on any subject.

- Targeting. You can send a survey to a general audience or a targeted one, which opens up a lot of possibilities for your study.

- Press worthiness. An interesting study that’s based on trustworthy data is a great target for media attention and internet virality.

- Accessible. You don’t need a big social media following or a lengthy email list to send a survey.

- Speed. You can collect 1,000 responses in a couple of days versus the weeks it might take to drum up responses organically.

Drawbacks

- Budget. Sending a survey via a market research panel will cost money, but these days you don’t have to spend tens of thousands of dollars with a full-service agency to target the people you need. There are DIY options like SurveyMonkey Audience that are much more affordable.

- Feasibility. Targeting options vary, but job-role targeting like we mentioned in the “Email your contacts or customer base” section can be challenging.

Sample size

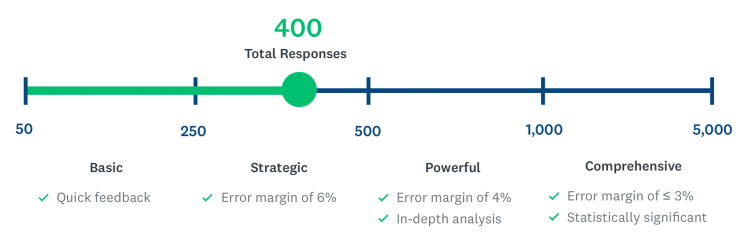

Once you’ve decided how you’ll send your content marketing survey, the next step is to decide how many people you’ll send it to. This step is extremely important. Your sample size affects your credibility, the validity of your results, and your options for distributing the content you create based on your findings. There are four main issues to consider when you’re trying to determine your sample size, and you’ll want to make sure you check off each one before you continue.

Distribution channel: We’ve already touched on this topic in the section above, but your distribution channel can play a major part in the sample size you aim for.

- Running a quick, fun survey on social media? 200 responses will probably do.

- Trying to get some industry insights using a panel or an email list? As long as you’re not making wild assertions and you’re staying within your niche, 500 is a respectable place to start.

- Using a panel to survey a general population with the hope that the press will pick up your results? 1,000 responses is generally the magic number.

66% of people say 1,000 or more respondents is the lowest sample size they’d trust in content that cites survey research and data.

These are just rough, minimum requirements. In each of these cases, the more responses you get, the better—particularly in the third case. Netflix had 30,267 responses for its Netflix cheating study, which lends a ton of credence to its results for journalists and their readers.

Margin of error

One of the biggest benefits of a large sample size is its effect on your margin of error. A margin of error tells you how much your results might differ from the true value for the whole population. In essence, it tells you how confident you can be that your results are accurate. For example, if 50% of respondents say they like ice cream and your margin of error is 5% (typically reported at a 95% confidence level), then the true percentage of people who like ice cream is most likely somewhere between 45% and 55%.

You can learn more about how your desired margin of error affects the sample size you need; we’ve even got a calculator that will do it for you.

The main thing to remember is that the lower the margin of error, the better. Journalists will demand a slim margin of error before writing about a study—particularly if it’s about a serious or newsy issue. That’s why political pollsters send surveys to thousands and thousands of people. As mentioned before, if your subject matter is a little lighter, then you can relax your sample size standards slightly. Here’s a rough view of how sample size and margin of error relate in a general population.
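To see where figures like these come from, here’s a minimal sketch of the textbook margin-of-error formula for a proportion, MOE = z × √(p(1 − p) / n). It assumes a simple random sample, a 95% confidence level (z = 1.96), and the worst case p = 0.5. Panels that weight or balance their samples may report somewhat different numbers, and this isn’t necessarily how any particular calculator works. The function names are our own.

```python
import math

Z_95 = 1.96  # z-score for a 95% confidence level


def margin_of_error(n: int, p: float = 0.5, z: float = Z_95) -> float:
    """Margin of error for a proportion from a simple random sample of size n.

    p = 0.5 is the worst case, which gives the widest margin.
    """
    return z * math.sqrt(p * (1 - p) / n)


def required_sample_size(target_moe: float, p: float = 0.5, z: float = Z_95) -> int:
    """Smallest sample size whose margin of error is at most target_moe."""
    return math.ceil(z ** 2 * p * (1 - p) / target_moe ** 2)


# Rough sample sizes from the distribution-channel guidance, plus a larger one
for n in (200, 500, 1000, 2000):
    print(f"n={n:>5}: ±{margin_of_error(n):.1%}")

print(required_sample_size(0.05))  # responses needed for a ±5% margin
```

Under these assumptions, 200, 500, 1,000, and 2,000 responses give margins of roughly ±6.9%, ±4.4%, ±3.1%, and ±2.2%, and a ±5% margin takes 385 responses. Notice the diminishing returns: quadrupling the sample only halves the margin of error.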

Feasibility

When you’re using a panel to gather survey responses, the panel picks respondents from an inventory of available respondents. Some are more in demand than others. That means that the more niche a population you target with your panel, the more difficult it’s going to be to find respondents. For example, if you want to target mothers, like Time did, or people who stream TV on Netflix, it probably won’t be too difficult to find enough respondents. But if you want to survey mid-level managers in marketing who use your software, you’re going to have trouble finding enough respondents.

Budget considerations

It’s better to run a general survey, or one with a slightly higher margin of error than you’d like, than to run no survey at all. You’re going to have a budget for your project, and you’ll have to work around it as best you can.

Test it!

We seriously can’t say this enough: before you send your survey, test it multiple times.

- Make sure your skip logic works correctly if you’re using it.

- Make sure that your answer options are randomized (unless you’re using a Likert question).

- Time how long your survey takes to complete. Longer than 10 minutes? Consider trimming some of your questions.
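If you’re curious what answer randomization actually does, here’s a minimal sketch. Survey tools like SurveyMonkey handle this with a setting, so you normally won’t write code for it. The function name `present_options` and the answer options below are hypothetical. Each respondent sees a custom answer question’s options in a fresh random order, which helps reduce order bias (people tend to favor options near the top of a list). Likert scales keep their natural order so the scale still reads logically.

```python
import random
from typing import List, Optional


def present_options(options: List[str], is_likert: bool,
                    rng: Optional[random.Random] = None) -> List[str]:
    """Return answer options in the order one respondent will see them."""
    if is_likert:
        return list(options)      # keep the scale in its natural order
    if rng is None:
        rng = random.Random()     # unseeded: a different order for each respondent
    shuffled = list(options)      # copy so the master list isn't modified
    rng.shuffle(shuffled)
    return shuffled


likert = ["Strongly agree", "Agree", "Neither agree nor disagree",
          "Disagree", "Strongly disagree"]
ad_formats = ["Video", "Image", "Text", "Audio"]  # hypothetical custom answer options

print(present_options(likert, is_likert=True))
print(present_options(ad_formats, is_likert=False))
```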

05 Analysis

Finding and showcasing engaging data

Now comes the fun part. Your perfectly designed survey, based on a focused goal and sent to exactly the right audience, has come back. Now all you’ve got to do is dive in. Sometimes it’s easy to pick the most compelling data points from your survey data, but often the data won’t look exactly how you expected. Don’t worry! Even if it didn’t turn out how you’d hoped, there are a few tools and tricks you can use to make the best of it.

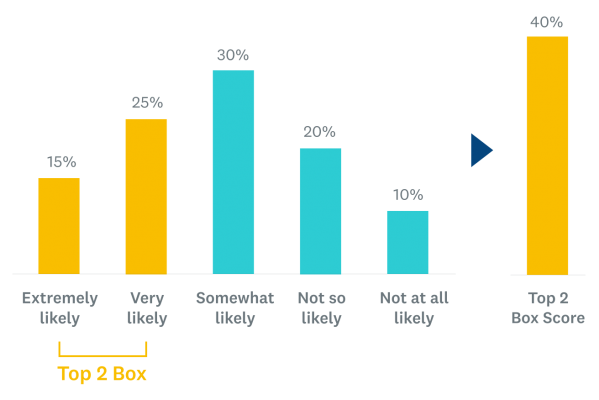

Create Top 2 box scores from your Likert scale questions

Likert scale questions may be the simplest question type in your survey, but the raw results aren’t always exactly what you’re looking for. They’re designed to give you focused results on a single subject, but when responses are split across five answer options, the numbers you get back can feel a little unfocused. That’s where Top 2 box scores come in. Take a look at the example below.

Creating a Top 2 box score is a market research tactic for getting more focused data out of your Likert questions. Instead of reporting 15% and 25% separately, which lacks impact and can be unwieldy to write about, you add the top two (or bottom two) scores together. 40% is a much more impactful number and is much simpler to write about. Feel uncomfortable using this technique? Don’t: political pollsters, the survey creators who face by far the most scrutiny, do it all the time.
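Here’s a minimal sketch of the arithmetic. It uses made-up counts that mirror the 15% and 25% example. The function name and answer labels are our own assumptions, and the `scale` argument lists answer labels from most positive to most negative.

```python
from typing import List, Mapping, Tuple


def top_two_box(counts: Mapping[str, int], scale: List[str]) -> Tuple[float, float]:
    """Return (top-2 share, bottom-2 share) for a Likert question.

    `scale` lists the answer labels from most positive to most negative.
    """
    total = sum(counts.get(label, 0) for label in scale)
    if total == 0:
        raise ValueError("no responses to summarize")
    top = sum(counts.get(label, 0) for label in scale[:2]) / total
    bottom = sum(counts.get(label, 0) for label in scale[-2:]) / total
    return top, bottom


SCALE = ["Strongly agree", "Agree", "Neither agree nor disagree",
         "Disagree", "Strongly disagree"]

# Made-up counts from 1,000 respondents: 15% strongly agree, 25% agree
counts = {"Strongly agree": 150, "Agree": 250, "Neither agree nor disagree": 300,
          "Disagree": 200, "Strongly disagree": 100}

top, bottom = top_two_box(counts, SCALE)
print(f"Top 2 box: {top:.0%}, bottom 2 box: {bottom:.0%}")  # Top 2 box: 40%, bottom 2 box: 30%
```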

Use filters to find the stories under the surface

There’s no worse feeling than sending a survey, excitedly awaiting the results, and getting back data that’s ambiguous or inconclusive. We’ve all been there. There’s a normalizing effect that happens when you sample a large population that can take the fun out of your results. Don’t worry: Sometimes the most interesting story isn’t what most people said in your survey, but what certain groups said. Filters can help you cut the noise out of your results and listen to each group separately.

Filter by demographic

This is where your well-thought-out demographics questions start coming in handy. Filtering by demographic group is a great place to start looking for differences of opinion. It allows you to see how each demographic group answered your survey differently. For example, these results might look pretty inconclusive, but when you compare how men and women answered, clear differences emerge.
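Under the hood, filtering by demographic is a cross-tabulation. Here’s a minimal sketch that assumes each response is a dict mapping question names to answers. The question keys and the tiny dataset are invented for illustration. In this made-up data, 45% of respondents overall say they’ve Netflix cheated. That overall figure hides the fact that men (60%) and women (30%) answer very differently.

```python
from collections import Counter, defaultdict
from typing import Any, Dict, List


def crosstab(responses: List[Dict[str, Any]], group_question: str,
             target_question: str) -> Dict[Any, Dict[Any, float]]:
    """For each answer to group_question, the share giving each answer to target_question."""
    counts: Dict[Any, Counter] = defaultdict(Counter)
    for response in responses:
        group = response.get(group_question)
        answer = response.get(target_question)
        if group is None or answer is None:
            continue  # skip respondents who skipped either question
        counts[group][answer] += 1
    return {
        group: {answer: n / sum(answers.values()) for answer, n in answers.items()}
        for group, answers in counts.items()
    }


# Made-up responses: 20 respondents, 9 of whom (45%) say "Yes"
responses = (
    [{"gender": "Man", "netflix_cheated": "Yes"}] * 6
    + [{"gender": "Man", "netflix_cheated": "No"}] * 4
    + [{"gender": "Woman", "netflix_cheated": "Yes"}] * 3
    + [{"gender": "Woman", "netflix_cheated": "No"}] * 7
)
print(crosstab(responses, "gender", "netflix_cheated"))
```

A 20-person sample is far too small to report, of course. In a real survey, you’d want each group comfortably above the minimum group sizes discussed below.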

When filtering by demographic, it’s best to start broad and get more specific from there. Be careful to avoid non-sequiturs. While you may spot interesting differences between different groups, consider whether they have any actual relevance to the point you’re trying to make in your content.

Here are a few popular demographic groups to get started on:

- Gender

- Age group

- Income

- Job level

- Location

You can even stack filters on top of one another to get an even closer look. Be careful, though. Unless you’ve got a really large sample size, whittling away too much at the size of your groups will likely make your results lose their validity. Try not to draw large conclusions on groups below 100 respondents, and definitely avoid talking about groups smaller than 50.
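Here’s a minimal sketch of stacked filters with the group-size guidance above built in. Groups under 50 respondents aren’t reported at all, and groups under 100 come with a caution note. Respondents are assumed to be dicts of question-to-answer pairs, and the function names and data are hypothetical. A filter is just a question/answer pair, so the same approach also works for non-demographic questions, like “among people who say they click on ads online.”

```python
from typing import Any, Dict, List, Optional, Tuple

Respondent = Dict[str, Any]

MIN_REPORTABLE = 50   # avoid talking about groups smaller than this
MIN_CONFIDENT = 100   # avoid drawing large conclusions below this


def filter_respondents(responses: List[Respondent],
                       criteria: Dict[str, Any]) -> List[Respondent]:
    """Stacked filters: keep only respondents who match every question/answer pair."""
    return [r for r in responses if all(r.get(q) == a for q, a in criteria.items())]


def share_choosing(group: List[Respondent], question: str,
                   answer: Any) -> Tuple[Optional[float], str]:
    """Share of a filtered group giving `answer`, plus a note about group size."""
    n = len(group)
    if n < MIN_REPORTABLE:
        return None, f"n={n}: too small to report"
    share = sum(1 for r in group if r.get(question) == answer) / n
    note = f"n={n}" if n >= MIN_CONFIDENT else f"n={n}: interpret with caution"
    return share, note


# Hypothetical responses
responses = (
    [{"gender": "Woman", "age_group": "18-29", "clicks_ads": "Yes"}] * 30
    + [{"gender": "Woman", "age_group": "18-29", "clicks_ads": "No"}] * 30
    + [{"gender": "Man", "age_group": "30+", "clicks_ads": "Yes"}] * 200
)
young_women = filter_respondents(responses, {"gender": "Woman", "age_group": "18-29"})
print(share_choosing(young_women, "clicks_ads", "Yes"))
```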

Go beyond demographics

You can filter your results by any question your respondents answer—not just demographic questions. That adds a lot of flexibility to your filtering options. It allows you to make statements like “among people who say they click on ads online, 54% say they like happy ads the most” or “among those who said they like happy ads the most, just 22% said celebrity endorsements are most likely to catch their attention.”

Choosing the right type of visual

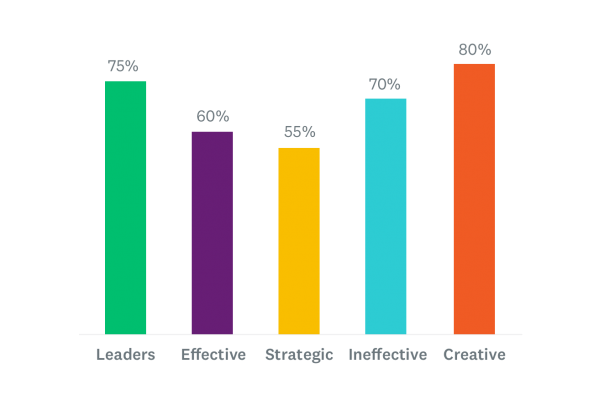

Remember in the survey-writing section of this guide when we talked about the differences between single-answer questions and multiple-answer questions? Here’s where you’ll really start seeing those differences. Although every case is different, you’ll often find that a graph of a multiple-answer question looks like this:

Notice there’s not a ton of variance between answer options, and while there is a clear winner, it’s not very pronounced. When you let respondents select multiple answer options, they often tend to “spread out” their responses. Questions where this happens usually don’t make very compelling visuals, but they do make great data points to call out. In the example above, saying “80% of people think marketers are creative” is a powerful statement. But a graph isn’t very useful here, because the rest of the answer options aren’t necessary to make our point and may even work against it.

Now let’s take a look at a graph of the same question with only a single answer.

As you can see, there’s a lot more variance between the answers. Most of the time, but not always, you’ll find this is the case with single-answer questions. That’s because you’re forcing respondents to choose just one answer option, so they can’t “spread out” their responses as much.

On its own, the data point from the multiple-answer example about how creative marketers are was much more compelling than the same answer option in this example. However, this graph is much more useful for comparing and contrasting answer options, and illustrating those differences is exactly what a graph like this one does best.

The examples above are by no means rules. Multiple-answer questions can sometimes present compelling graphs and single-answer questions can often make really good data points. As a general rule, though, it’s best to use graphs to illustrate significant differences between answer options. Otherwise, they lose their power or may even confuse your readers.
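Part of the reason multiple-answer results “spread out” shows up in the arithmetic. Shares for those questions are calculated against the number of respondents, not the number of selections, so they can add up to well over 100%. Single-answer shares add up to 100% (before rounding). Here’s a minimal sketch with made-up responses to a “which words describe your company’s marketing team” question; the option labels and data are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set


def option_shares(responses: List[Set[str]], options: List[str]) -> Dict[str, float]:
    """Share of respondents who selected each option.

    Each response is the set of options one respondent chose. For a single-answer
    question every set has exactly one item; for a multiple-answer question a set
    can hold several, so the shares can total more than 100%.
    """
    n = len(responses)
    counts = Counter(option for selected in responses for option in selected)
    return {option: counts[option] / n for option in options}


OPTIONS = ["Creative", "Strategic", "Analytical"]

multi = [{"Creative", "Strategic"}, {"Creative"}, {"Creative", "Analytical"},
         {"Strategic"}, {"Creative", "Strategic", "Analytical"}]
single = [{"Creative"}, {"Creative"}, {"Creative"}, {"Strategic"}, {"Analytical"}]

print(option_shares(multi, OPTIONS))   # "Creative" is selected by 4 of 5 respondents
print(option_shares(single, OPTIONS))
```

In the multiple-answer data, “Creative” reaches 80%, a striking stat on its own, but every bar is fairly tall (80%, 60%, 40%). In the single-answer data, “Creative” drops to 60% while the other options fall to 20%, so the gaps are much easier to see in a graph.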

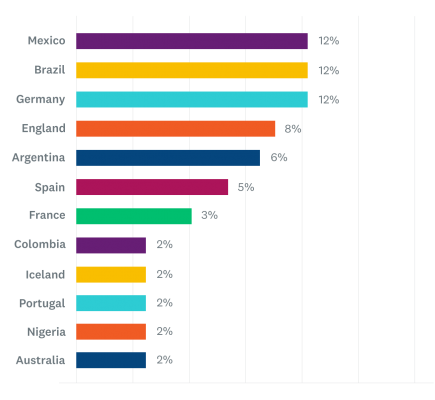

When to use horizontal graphs

Horizontal graphs are another option for illustrating your data. While vertical bar graphs are most instructive when they show differences between answer options, horizontal graphs work best to show where answer options sit relative to one another. They’re also good for illustrating data from questions with a lot of answer options. Take, for example, this study SurveyMonkey did with ESPN asking Americans who they’d root for in the 2018 World Cup.

With 12 teams to choose from (we’ve shortened this list from the entire 32-team roster), a vertical bar graph of this data would be overwhelming, and it would be nearly impossible to see where each team ranks relative to the others. Also, the size of each bar isn’t as instructive or important as where each answer option ranks relative to the rest. A horizontal graph like this serves much better.

Most survey software tools, like SurveyMonkey, will automatically present your data as graphs, but not every tool can switch between vertical and horizontal layouts. Priceonomics has a great free tool if you want to create simple, functional tables that work in basically the same way. For more creative visualizations, even non-designers can use Canva to create cool infographics.

Quick tips on visuals

Vertical bar graph

- Use vertical bar graphs when you want to show the differences between seven or fewer answer options.

Horizontal bar graphs

- Use horizontal bar graphs when you want to illustrate the relationship between seven or more answer options.

Pie chart

- Use pie charts when showing a distribution of the pieces that make up the whole.

- Don’t use them to illustrate any more than 3 answer options.

Line graph

- Use line graphs to show how trends progress over time.

Any other fancy chart type

- Your readers probably aren’t data scientists, so we recommend sticking with the basics for content.
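If it helps, the quick tips above can be written down as a simple rule of thumb. This is a minimal sketch of our own, not a feature of any charting tool, and `suggest_chart` is a hypothetical name. The tips overlap at exactly seven options, so this sketch arbitrarily sends seven options to a vertical bar graph.

```python
def suggest_chart(n_options: int, parts_of_whole: bool = False,
                  over_time: bool = False) -> str:
    """Rough chart suggestion following the quick tips (not a hard rule)."""
    if over_time:
        return "line graph"            # trends over time
    if parts_of_whole and n_options <= 3:
        return "pie chart"             # pieces of a whole, 3 options max
    if n_options <= 7:
        return "vertical bar graph"    # compare differences between a few options
    return "horizontal bar graph"      # rank many options relative to each other


print(suggest_chart(12))  # e.g. the 12-team World Cup question
```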

Considerations

- Get rid of the clutter. You don’t need numeric axis labels AND individual data point labels. You don’t need a legend when you only have 1 data series and the chart title will do it justice. A good rule of thumb is to include the least amount of information that makes a complete point.

- Rounding is your friend. Readers won’t hold you to a high standard on decimal places. Plus, 50% has a better ring to it than 49.51%.

Remember, none of these are hard rules. Whether to present data as standalone data points or in graphs can change completely depending on the case. It’s up to you to decide the best way to present it so that it’s valuable and instructive to your readers.

06 Content creation

Putting your data into action

Now’s the moment you’ve been waiting for. Armed with a folder of informative charts and infographics, plus a hefty list of data points that fit together to tell a relevant and compelling story, you can begin creating your content.

If you’ve followed all the steps in this guide, this should be the easy part. As we mentioned in the planning section of this guide, there are three main directions to take, depending on your goals. Here’s how we recommend creating content for each of the three types of projects.

Writing a blog or article on your site

Even if you’re planning to pitch your data to media or turn it into a gated report, that doesn’t mean you can’t also write an article about the data to get some traffic to your site.

- If you’re pitching to media, make sure you publish your article AFTER the media organizations publish their stories. Remember, journalists love exclusives.

- If you’re creating a gated report with your data, make sure you don’t give away everything in your blog post. Instead, write a teaser post that provides just enough information on the topic to leave readers hungry for more.

The survey creation framework we used in the Design section of this guide can act as a basic outline for your article: You’ve got a thesis to focus on in your introduction and several supporting points that you can organize into the sections that will make up the body. Your survey results will fill out, explain, or argue for each of those sections.

There are a few areas we’ve found that separate a good article from a great one. Keep them in mind as you’re writing.

Do the preliminary legwork

Your article won’t be worth much if nobody ever reads it. Make sure you’re targeting a relevant, valuable SEO keyword using tools like Ahrefs or Moz. Traffic from search is your best chance of getting your content in front of as many people as possible and keeping it relevant for as long as possible. Use a tool like BuzzSumo to see how other people have written about this topic in the past. What was successful? What wasn’t? Take an angle that’s unique and fits your data.

Write a headline you’d want to read

There’s an art to writing a good headline. A truly successful one will check all four of the following boxes:

- It’s enticing and clickable for your readers: It may seem pretty obvious to say that you shouldn’t write a boring headline, but you’d be surprised how often we see them. Write a headline you’d want to click on. Make it surprising or controversial; focus on relevancy and timeliness; use zippy verbs and spicy adjectives.

- It includes a valuable SEO keyword: Traffic from search engines is indispensable. Including a relevant, valuable SEO keyword in your headline (hopefully in the first few words) is an absolute must.

- It isn’t too long: Headlines lose their punch and can cause ugly formatting issues if they’re too long. We like to shoot for 65 characters in ours.

- It suggests you have exclusive data: There’s a good chance that you’re writing about a topic that’s been written about a lot already. A headline that shows you’ve got exclusive data makes it stand out and lends extra legitimacy.

If all that seems overwhelming, here’s a useful trick: Use your most compelling data point or finding as a headline. Take these, for example:

56% of people don’t like online dating, but they do it anyway

Is it OK to use emojis at work? Here’s what our survey found

Each takes a single data point or idea from a survey and, with a little bit of massaging, accomplishes all four of the requirements for headlines we laid out above. This isn’t to say that they always make the best headline. But if you’re having trouble writing a good one, using a data point is a good tactic to fall back on.
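Two of those checks, length and keyword placement, are easy to automate. Here’s a minimal sketch using a hypothetical `check_headline` function. It reports a headline’s length against our 65-character target and which word the SEO keyword starts on. The keywords in the example are assumptions chosen for illustration. Whether a headline is enticing or signals exclusive data is still your call.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeadlineCheck:
    length: int
    within_limit: bool
    keyword_position: Optional[int]  # 1-based word where the keyword starts; None if missing


def check_headline(headline: str, keyword: str, max_chars: int = 65) -> HeadlineCheck:
    """Check headline length and where a keyword appears (case-insensitive)."""
    index = headline.lower().find(keyword.lower())
    position = None if index == -1 else len(headline[:index].split()) + 1
    return HeadlineCheck(len(headline), len(headline) <= max_chars, position)


for headline, keyword in [
    ("56% of people don't like online dating, but they do it anyway", "online dating"),
    ("Is it OK to use emojis at work? Here's what our survey found", "emojis at work"),
]:
    print(check_headline(headline, keyword))
```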

Write an introduction that makes them stay

You’ve written a headline that’s made someone open your article. Nice work! Now you’ve got to make them stick around and actually read it. That’s your introduction’s job.

There are countless ways to approach writing an introduction, and we won’t go into them all. Just make sure it’s engaging enough to make people want to continue reading and seamlessly connects your headline to the body of your article.

It’s also a good idea to give readers some context about your study. You should briefly mention where you got your data and how many people you surveyed to get it. It will help readers understand why you’ve got authority to write about the subject, particularly if it’s not completely in your wheelhouse. It’s OK if it’s something simple:

To find out, we surveyed 500 marketers about the challenges they face getting the attention of today’s consumers.

57% of people say they pay a great deal or a lot of attention to the data collection method or source of an article they read.

Contrast your data with information that’s already out there

Depending on your topic, there’s a good chance that some information on it is already out there. It’s a good idea to do some research and find some points that you can compare and contrast with your data. Try to avoid citing other surveys, though; then it’s just a survey version of “he said, she said.”

Instead, look for quantifiable metrics that your survey data can add color and context to. A survey of real estate professionals means a lot more against the backdrop of data about the booming real estate market. On the other hand, writing solely about dry business performance metrics can be a drag, unless you add qualitative survey data from customers that helps give voice to the reasons behind that performance.

Don’t overuse data points and charts

We get it. You’ve got a ton of cool data points and lots of really interesting graphs. Why not include them all in your article?

Your readers came to read an article, not a data readout. While the data itself is interesting, it’s your expert framing and explanation that makes it into a useful, engaging story. Be brutally selective about the data points you include in your writing.

In any paragraph of your article, you should use a maximum of one, sometimes two, sentences to deliver data points from your survey.

The rest should be explanation, context, contrasting data, or actionable information.

Treat your charts and graphs with a similar level of selectivity. When used correctly, they can do a lot to help explain your data and give your readers a much-needed break between thoughts. When you use too many, they’re repetitive, distracting, and overwhelming. Use your best judgment about how many charts to include. We find that including one chart or infographic every 400 words is about the right cadence for preserving the flow of your article.

43% of people say they prefer to see data presented as charts, graphs, or infographics.

Include a methodology statement

Methodology statements are there solely to explain the rigor behind your survey in order to make readers trust your findings. Here’s an example Netflix used:

The survey was conducted by SurveyMonkey from December 20-31, 2016 and based on 30,267 responses. The sample was balanced by age and gender and representative of an adult online population who watch TV shows via streaming services as a couple in The United States, Canada, UK, Australia, New Zealand, Philippines, Singapore, India, Japan, Taiwan, South Korea, Hong Kong, UAE, Mexico, Chile, Colombia, Brazil, Argentina, Spain, Portugal, Turkey, Poland, Italy, Germany, France, Sweden, Norway, The Netherlands, and Denmark.

Always include your methodology statement at the end of an article as a reference. There’s no need to provide all this information up front.

Making a PR pitch to media

As we mentioned in the planning section, getting journalists to write about your survey results can be a tricky business. Generally, they’re only interested in data that’s truly newsworthy or very relevant to the industry or niche they cover. Additionally, they won’t touch anything that’s too promotional or congratulatory of your business.

It’s still absolutely possible to get their attention. We’d even say your chances are pretty good, as long as you get interesting results and present them in a way that’s honest and easy to write about.

70% of people say they’d be more likely to trust data presented on a news or media publication than on a company’s site.

No matter how you plan on pitching your results, it’s usually helpful to start by making a good data summary sheet. A data summary sheet is like a lightweight version of a press release. If you decide to write a press release, your summary will be the outline. If you want to make personalized pitches to journalists (which we highly recommend), your summary sheet will be the resource you use to tailor each pitch to each journalist.

Here’s the rough outline of what we’d recommend goes in your summary:

Headline: The headline and subhead should feature your most provocative finding and explain why it’s important.

Introduction: Frame the essential argument or information in the intro, and aim for one to two very short paragraphs (200 words or fewer) that highlight the top 3-5 findings you really want to showcase. Use them to structure the rest of the announcement.

Main points: Create a section for each top finding that includes a short description of the finding, why it’s important, and how it’s relevant to the larger issue you’re studying. Below each main point, add bullet points with the most relevant or interesting data points you have that support it.

Key table or graph: If you have a single table or chart that does a really good job of representing your data, it’s a good idea to include it. It doesn’t have to be fancy, but it should be easy to read and reference.

A link to your full results: You don’t need your results to look pretty. A well-organized spreadsheet will do.

A detailed methodology statement: Cap your survey summary off with a methodology statement like the one we presented in the previous section.

Your summary puts everything important from your survey at your fingertips.

If you’re going to pitch individual journalists, you have an easy-to-reference sheet of the most important talking points. It’s simple enough for you to quickly scan for relevant data while on the phone and detailed enough that you can copy/paste the most relevant sections into an email.

Your summary will help you think about different angles that might suit different journalists. This is an area where demographic breakdowns can really come in handy. If you’re thinking about pitching to a journalist who writes for a mainly female audience, you might look at how women answered your survey. Age groups, political affiliations, and job roles are all areas to consider.

If you’ve got data that shows something important, such as a breakthrough or a finding you think is genuinely newsworthy, it might be a good idea to create a press release. You can create one pretty easily by fleshing out the most important points of your summary and adding some optional, but helpful, additions.

- A quote from a third-party expert in the field you’re studying. It adds credibility and allows your expert to make commentary on your findings that’s useful but might not be appropriate for you, the objective researcher, to make yourself.

- A quote from a relevant high-level person at your company. The key word here is relevant: you don’t want a vice president of marketing commenting on a diversity and inclusion report unless there’s good reason.

- A “cleaned-up,” easy-to-read chart or spreadsheet of all your data. This lets anyone who wants to dive in pull out the pieces they think are most interesting.

Using survey data for lead generation

By “gating” your content with a form for collecting people’s contact information, you can gather a ton of leads for your salespeople to contact.

When you’re using survey data for lead generation, you’ve got to think bigger than a single asset. If you’re smart about how you set it up, a single survey can be the basis for an entire campaign. There are so many ways to approach using survey data as the backbone of a lead generation campaign, but for now, we’ll use our favorite method as an example.

Make a pillar to support your campaign

Make a single, comprehensive master asset about your study. This piece of content will be the gated asset at the core of your campaign, so it’s important to make it really good. Our favorite format for this type of project is a report-style asset, like the famous CMO survey. Reports like this are great because they’re highly visual and scannable, they can easily cover a lot of different areas of your subject, and they’re relatively easy to create. This report will be central to your campaign, but it certainly won’t be the only asset.

The beauty of doing the hard work of making a big, comprehensive asset up front is that you can repurpose the information in it as many times as you want to build out the content that supports your entire campaign. Here are some ideas:

Build buzz

Create a sneak-peek infographic that presents one of your most surprising findings in a creative, visual way. Write social media posts and blog posts that use just enough of your data to tease your upcoming report.

Deliver

Use the same data points and findings to create compelling ads, emails, and field marketing collateral that drive traffic to your report.

Follow up

Once you’ve gotten some mileage out of your report, you can use it to start planning new gated assets. Select one of the most important areas of your report and use the findings as a basis for a webinar with a panel of experts. Create an eGuide that provides a deeper look at one section of your data and gives actionable information about it to your readers.

If the core asset in your campaign is good enough, the opportunities for upcycling the content in it are practically endless.

Make it evergreen

If you’re planning to build out an entire group of assets and give each enough time to shine on its own, you should probably plan ahead to make sure your topic and data will be relevant for at least a year.

Don’t underpromote

Don’t give up on an asset just because it didn’t get as much attention as you wanted the first time you promoted it. Try another angle, a slightly different audience, or simply choose a different timeframe.

Michele Linn, cofounder and head of strategy of Mantis Research, helps clients do this all the time. Here’s what she recommends:

“To help your research get even more authority and rank, decide on a ‘home base’ for your research (such as a blog post, landing page, or gated asset) and point all links there. Then, create spin-off stories and content from your findings. Think SlideShares, datagraphics, blog posts, infographics, webinars and more! To further expand the reach and amplification of your research, consider collaborating with another company or brand on the study: the data gets posted on both sites & promoted by both companies. When publishing a new piece of research, I also recommend tapping into your network to see where you can guest-post on other blogs. The benefit is that people will start to cite and link to YOU as the source of the data.”

Don’t skimp on quality

Your readers didn’t technically “pay” for this content, but it’s not exactly free either. Since they’ve invested something (their contact information) to read your content, it’s important you make sure that investment pays off. Your asset has to deliver actually important or useful information. The production value should be high. Your readers should be glad they downloaded it. Otherwise, you’ll lose their trust and erode the effectiveness of your next campaign.

33% of people said the information they got from gated assets was ‘very valuable.’

Offer first access to your respondents

Show your survey respondents some love for being your most important asset and the foundation of your campaign. They’ll appreciate the gesture of getting first access to your flashy new content. As the subjects of your study, they’ll be intrinsically interested in your results, and chances are they’ll even be good potential leads.

Tying it all together

If there’s one thing we don’t want, it’s for you to feel limited by the instructions in this guide. If you want to try several of these tactics together, or pick and choose the ones you think apply best to your situation, then more power to ya.

The information we’ve presented here is a set of guidelines based on our own experiences, which we gained only through a lot of trial and error. It’s not our goal to make you do things exactly the way we do. We hope you now feel confident enough in creating your own surveys to use them to find data to write about anything.

We really like surveys, and we like to see good content based on them even more. If you use sound survey fundamentals and responsible reporting methods to make smart, interesting content, then you can win over the most skeptical media consumers and persuade your dream customers to give you a try.

Happy surveying!
