Survey best practices

Learn how to design a great survey, from planning to asking the right questions.

People get surveys all the time. Why should they take yours? And how do you know if their answers are accurate and thoughtful?

You can’t guarantee that everyone who gets your survey will take it seriously (or take it at all). But you can minimize bias and increase your response rate with survey best practices.

What are survey design best practices? They’re guidelines for optimizing a survey for better engagement and reliable results. In this guide, you’ll learn how to ask great questions, fix common survey errors, and make your survey shine.

CHAPTER 1: SURVEY BEST PRACTICES OVERVIEW

Why survey best practices matter

Simply put, you conduct surveys to make informed decisions. For example, you might collect event feedback from people who attended your recent conference. Did they find the speakers engaging? Did they like the food? Have a good overall experience? Their feedback can then inform planning for your next event, ensuring that it will meet their preferences and expectations.

Yet the answers you get are only as strong as your survey. If your survey is disorganized, hard to read, or full of errors, you might see “satisficing.” Satisficing is when people don’t put effort into answering your survey, skewing your data.

You can make your surveys more engaging and show you’re respectful of respondents’ time by following best practices for offline and online survey design.

CHAPTER 2: PLAN YOUR SURVEY

How to design a survey: Best practices for survey writing

Set a goal

Let’s say you want to use surveys to conduct market research, like measuring brand awareness to inform your advertising. You might be tempted to ask lots of questions. The more data, the better. Right?

The problem is, if your survey is a lot of work to fill out, people might be less inclined to complete it. Plus, if your survey seems unfocused or poorly organized, people might lose trust in you and abandon your survey completely.

What are best practices for survey design? Before you start writing survey questions, it’s important to set a goal for your survey.

To do this, ask yourself: What am I trying to learn or measure? Who should take my survey? What do I want to do with their answers?

You might end up with a survey goal such as:

To improve our advertising, I want to survey people aged 25 to 34 to see how familiar they are with our brand.

A goal like this will help you stay focused as you plan your survey design, from the questions you ask to the question types you use.

Plan your survey design

Once you have a goal, you can get a better sense of the questions you need to ask. Your survey design plan should include objectives that go back to your goal. If your goal is to measure brand awareness within your target age demographic, you might have objectives to:

- See if people recall your latest social media ad campaign

- Measure how familiar people are with your brand

- Understand brand familiarity by key demographics (e.g., gender, location)

Remember to limit the number of objectives. They’ll help you choose which questions to ask, and if you have too many objectives, you’ll probably have too many questions for one survey.

Design with your data in mind

You have a goal and objectives. Before crafting your survey questions, define what type of data you need to achieve those objectives. Determining whether you require qualitative or quantitative data will shape your entire question design process and ensure you gather information that effectively supports your goals.

Here’s an example of a qualitative survey question:

When you think of this product category, what brands come to mind?

You’re asking people to recall brand names on their own. What you’re collecting is more difficult to analyze, but it also may give you a better understanding of whether or not your brand is top of mind.

The insights you get will be different from those you’ll get from a quantitative survey question, or closed-ended question, which asks people to choose from a list of defined options. For example:

Which of the following brands have you heard of? (Select all that apply.)

- Voltara

- Snuck

- Automogo

- Asher Health

- I haven’t heard of these brands

The quantitative question takes much less effort to answer. But the data may not be as illuminating. That said, it might be a bit easier to analyze quantitative data, which yield raw numbers and percentages.

It’s up to you to determine the balance of closed and open-ended questions in your survey. Here’s what we generally recommend for open-ended questions:

- Limit yourself to two open-ended questions

- Ask a series of closed-ended questions, then include a single textbox question to capture any other feedback

- Put open-ended questions on a separate page towards the end of your survey

- Make sure that open-ended questions are optional
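The recommendations above can be turned into a quick automated check. The following is a minimal sketch, not a SurveyMonkey feature: the `Question` class, its fields, and `check_open_ended` are hypothetical names invented for illustration, and the sketch assumes you keep your draft questions in a simple list.

```python
from dataclasses import dataclass


@dataclass
class Question:
    # Hypothetical structure for a draft survey question.
    text: str
    open_ended: bool = False
    required: bool = False
    page: int = 1


def check_open_ended(questions, max_open=2):
    """Return warnings for drafts that break the open-ended guidelines."""
    warnings = []
    open_qs = [q for q in questions if q.open_ended]

    # Guideline: limit yourself to two open-ended questions.
    if len(open_qs) > max_open:
        warnings.append(
            f"{len(open_qs)} open-ended questions; aim for {max_open} or fewer"
        )

    # Guideline: open-ended questions should be optional.
    for q in open_qs:
        if q.required:
            warnings.append(f"Make this open-ended question optional: {q.text!r}")

    # Guideline: open-ended questions belong on a separate page.
    closed_pages = {q.page for q in questions if not q.open_ended}
    for q in open_qs:
        if q.page in closed_pages:
            warnings.append(f"Move this open-ended question to its own page: {q.text!r}")

    return warnings


draft = [
    Question("Which of the following brands have you heard of?", page=1),
    Question("Anything else you'd like to share?", open_ended=True, page=2),
]
print(check_open_ended(draft))  # an empty list means no warnings
```

The check doesn't try to judge whether a question sits "towards the end" of the survey, since that depends on your overall page layout.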

CHAPTER 3: WRITE GOOD SURVEY QUESTIONS

Survey question best practices

If your survey questions confuse, mislead, or offend your audience, you might not get accurate answers. Or any answers at all. Here are a few tips for writing great survey questions.

Be clear and concise

- Avoid jargon, technical language, or acronyms. Especially if your audience is supposed to reflect the general population.

- Keep your questions as short as possible. People will be less willing to read long questions and may misunderstand what you’re asking.

- If your question has special instructions, add them (in parentheses). Here are a few examples:

Rank the following products from your favorite (1) to your least favorite (5).

Which of the following words would you use to describe our product? (Select all that apply.)

Which factors most influenced your recent purchase? (Select up to 3.)

Avoid survey question bias

As a researcher or survey creator, you have goals or hypotheses in mind. Unfortunately, it’s common for researcher bias to creep into surveys. Here are some of the most common types of survey question bias and how to avoid them.

Leading question example

A leading question is written in a way that influences survey responses. For example:

At Voltara, we take pride in our product quality. How satisfied are you with your most recent product purchase?

The first part of this question might affect how the respondent views their experience, leading them to answer more favorably.

A better way to ask this question would be to leave out the first sentence altogether and make sure to give people a range of answer options from “Not at all satisfied” to “Extremely satisfied.”

Loaded question example

A loaded question assumes something about the respondents that might not be true. The following example of a loaded question isn’t necessarily a problem:

Which factors influenced your most recent purchase? (Select all that apply.)

But if someone who didn’t make a recent purchase is forced to answer the question, their answer will be inaccurate, skewing your data.

Double-barreled question example

A double-barreled question asks people to give only one answer to two different questions. Here’s an example:

How satisfied are you with the price and quality of our product?

- Very satisfied

- Somewhat satisfied

- Neither satisfied nor dissatisfied

- Somewhat dissatisfied

- Very dissatisfied

If someone chooses “Somewhat satisfied,” what are they responding to? What if they’re happy with the quality but not the price? You can’t tell from a single answer, so ask about price and quality in separate questions.

Avoiding absolutes

Absolute questions use words like “every,” “always,” and “all” in the question prompt. These might push the respondent to agree with a strongly worded question without allowing for more nuanced opinions. Here’s an example:

Do you always make purchases online?

- Yes

- No

Your respondents might make online purchases most of the time, half of the time, or on occasion. The absolute nature of this question, including the yes/no answer options, won’t provide useful data.

Sensitive survey questions

What’s a sensitive survey question? It depends on who you ask. Generally, questions about religion or faith, ethnicity, race, gender, age, sexual orientation, and income are considered sensitive. And you need to ask these questions in the right way or risk losing your audience.

Here are a few tips for asking sensitive survey questions:

- When asking about income, provide ranges to choose from (e.g. $31,000-$60,000) so people don’t have to give an exact number

- Provide a “Prefer to self-describe” option with an open textbox when asking questions about gender identity and sexual orientation

- When asking questions about ethnicity or race, explain how the data will be used

- Ask sensitive questions towards the end of your survey and try to make it optional for people to respond

Choose the right question type

When you planned your survey, you determined the type of data or insights you need. For example, closed-ended and open-ended questions will give you different insights. Plus, there are lots of different survey question types, from sliders to dropdowns that you’ll need to consider.

Pairing closed-ended and open-ended questions

Yes, you should generally limit the number of open-ended questions on a survey. But a well-placed open textbox question can give a lot of meaning to your quantitative data.

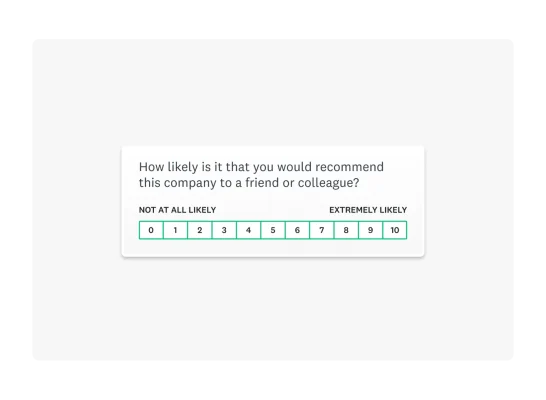

Take a look at the Net Promoter® Score (NPS) question, which asks someone how likely it is that they’d recommend a company to others. NPS is one of the leading metrics companies use across industries to measure customer loyalty.

People are asked to choose from 0 (not at all likely) to 10 (extremely likely). The result is a single score between -100 and +100, which companies can track over time and compare to NPS industry benchmarks.
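To show how 0 to 10 ratings become a score between -100 and +100, here is a small sketch of the standard NPS calculation. This guide doesn't spell out the cutoffs, so note the convention assumed here: respondents who answer 9 or 10 count as promoters, 0 through 6 as detractors, and 7 or 8 as passives. The function name and sample ratings are made up for illustration.

```python
def net_promoter_score(ratings):
    """Compute NPS from a list of 0-10 ratings.

    NPS = percentage of promoters (9-10) minus percentage of detractors (0-6).
    Passives (7-8) count toward the total but toward neither group.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses: 5 promoters, 2 passives, 3 detractors.
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(ratings))  # 20.0
```

If every respondent is a promoter, the score is +100. If every respondent is a detractor, it is -100.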

But a number can only tell you so much. That’s why it’s a good idea to ask people to explain their score with an open-ended question.

It will take more time to dig into the resulting qualitative data. But you can gain important insights or the “why” behind your numbers by taking a look.

For example, maybe people who give you lower ratings mention a negative interaction with customer service, while those with more positive ratings are happy with your product quality. Now you know where to focus your improvements.

Multiple choice questions

There are many types of multiple choice questions you can use for your survey. Here’s a quick overview of some multiple choice question types, including what to consider.

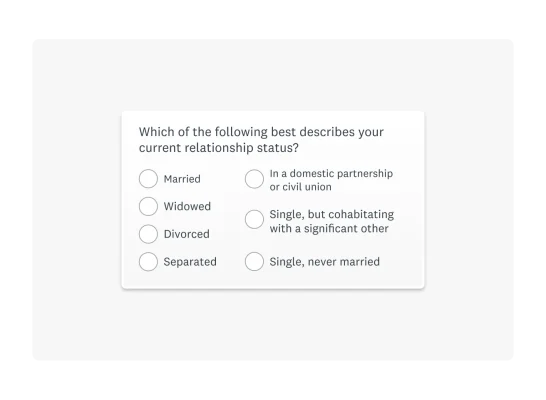

A multiple choice question can be as simple as asking someone to choose one option from a list. You might ask a demographic question like, “Which of the following best describes your current relationship status?” In this instance, you’d likely only allow someone to choose one answer option.

You can also allow someone to choose multiple answer options by enabling checkboxes. In that case, you’ll want to let people know that they can select more than one answer (e.g. “Select all that apply”).

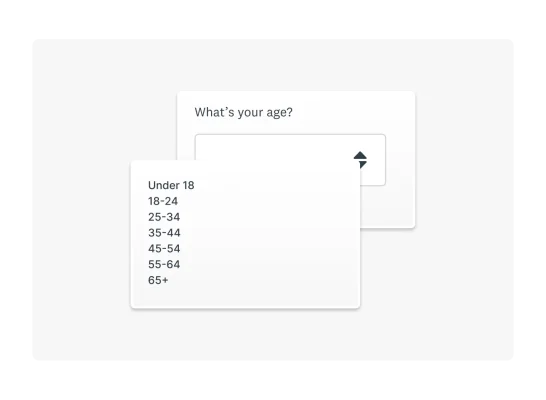

Other formats, like dropdown questions, can be helpful if you’ve got lots of answer options but don’t want to overwhelm your respondents. For example, an age dropdown question can be much easier to read and use on a mobile device.

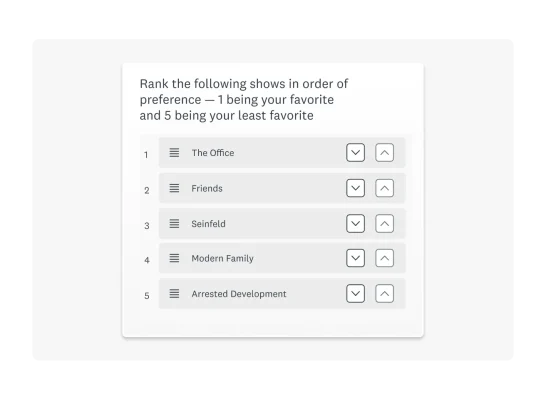

You can also use the ranking question type, which allows someone to rearrange answer choices in their order of preference.

Remember that ranking questions don’t indicate how much or how little someone likes an item. For example, someone might love the television shows “The Office” and “Friends,” and rank them one and two in a list of five options.

But they may feel neutral or really dislike the other shows on the list. All you know is that they ranked “The Office” first and “Arrested Development” last. Make sure to review the pros and cons of ranking questions before you use them in a survey.

Use matrix questions carefully

Sometimes, it makes sense to format your question as a grid, or matrix survey question. For example, you might ask someone to rate how satisfied or dissatisfied they are with five aspects of your customer service.

Instead of asking someone five separate survey questions, you can have them respond to different statements in one survey question.

Of course, there are pros and cons to matrix questions. Matrix questions are susceptible to straightlining, which is when people choose the same response for every row without taking the time to consider their answers. Here are a few tips for writing matrix questions to keep in mind:

- More and more people are taking surveys on mobile devices like phones and tablets. A matrix question can be extremely difficult to fill out on a mobile device, so consider your audience and choose your question type accordingly.

- Keep response options as brief as possible. The more words, the more likely you are to cause formatting or readability issues.

- We generally advise that you use five or fewer response options and items in a grid. That way, you’re not overwhelming respondents.
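If you export your matrix responses, you can get a rough sense of how much straightlining occurred. The sketch below assumes each response is a list of numeric ratings, one per row of the grid, with `None` for skipped rows. The function names and sample data are hypothetical. Keep in mind that identical answers can be genuine, so treat a flag as a prompt to look closer rather than as proof.

```python
def is_straightlined(row_answers):
    """True if every answered row in one matrix response has the same rating."""
    answered = [a for a in row_answers if a is not None]
    # A single answered row can't be straightlined.
    return len(answered) > 1 and len(set(answered)) == 1


def straightlining_rate(responses):
    """Share of responses (0.0 to 1.0) that were flagged as straightlined."""
    if not responses:
        return 0.0
    flagged = sum(1 for r in responses if is_straightlined(r))
    return flagged / len(responses)


# Hypothetical data: four respondents rating five aspects of customer service.
responses = [
    [4, 4, 4, 4, 4],     # same answer on every row: flagged
    [5, 3, 4, 2, 4],
    [3, 3, 3, 3, 3],     # flagged
    [2, 4, None, 5, 1],  # one row skipped
]
print(straightlining_rate(responses))  # 0.5
```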

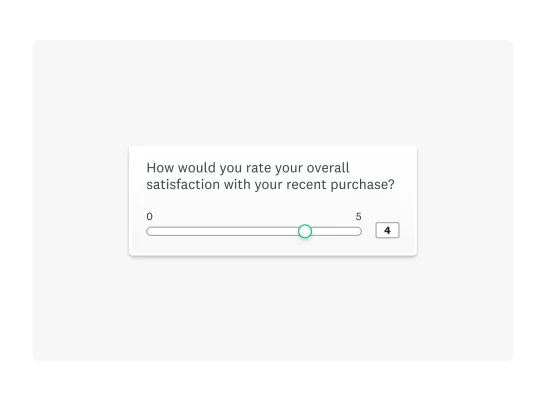

Survey rating scales best practices

Once you’ve figured out which types of survey questions you want to ask, you need to think about how you want people to answer.

For example, when it comes to multiple choice questions, how do you want people to rate their satisfaction? They could choose from 1 to 10, select a smiley face, or rate you with a number of stars. Or you could ask them to choose from a worded list, from very satisfied to very dissatisfied.

Worded vs numbered lists

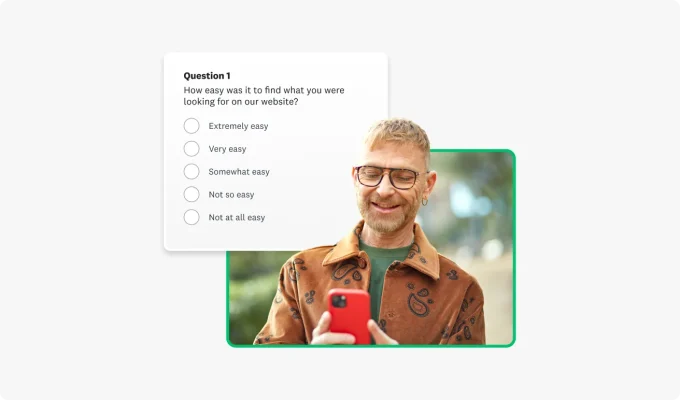

Let’s say you show a website feedback survey to people who just purchased from you online. You want to understand how easy it was for them to find what they were looking for on your site.

Now you’ve got some options. Do you ask them to rate their experience from 1 (not at all easy) to 5 (extremely easy)? Or do you remove numbers altogether and give them a list of worded answer options?

When it comes to numbered vs worded lists, here’s what to consider:

- Context: When do people see your survey? Someone who just made a purchase from you might not have much time to read through a bunch of answer options. A number slider bar or star rating might work better for someone short on time or patience.

- User-friendliness: If someone’s on a mobile device, a worded list might not be easy to read or choose from. Also, words in one language might not be as universally understood as a numeric rating scale or symbol.

- Insights: A numeric average can be subjective and harder to interpret than a clearly worded answer option. For example, saying your app’s average ease of use is 3.5 might not be as useful as saying, “55% of people say our app is ‘somewhat easy’ to use.”
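To see the difference between the two ways of reporting, the sketch below summarizes the same set of answers as an average and as a percentage breakdown by label. Only the endpoint labels and “Somewhat easy” come from this guide. The other labels, the function name, and the sample scores are assumptions for illustration.

```python
from collections import Counter
from statistics import mean

# Assumed 5-point wording; only labels 1, 3, and 5 appear in this guide.
LABELS = {
    1: "Not at all easy",
    2: "Not so easy",
    3: "Somewhat easy",
    4: "Very easy",
    5: "Extremely easy",
}


def summarize(scores):
    """Return (average score, percentage of respondents per worded label)."""
    counts = Counter(scores)
    total = len(scores)
    shares = {LABELS[k]: round(100 * counts[k] / total) for k in sorted(LABELS)}
    return mean(scores), shares


# Hypothetical answers from ten respondents.
scores = [3, 3, 3, 4, 5, 2, 3, 4, 3, 5]
average, shares = summarize(scores)
print(average)  # 3.5
for label, pct in shares.items():
    print(f"{pct}% chose {label!r}")
```

Here the average of 3.5 hides the fact that half of the respondents chose the middle option, which the breakdown makes obvious.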

Numbering your rating scales

Whether you go for words, numbers, or even symbols, you have to pick the right number of answer options for your survey. Here’s how.

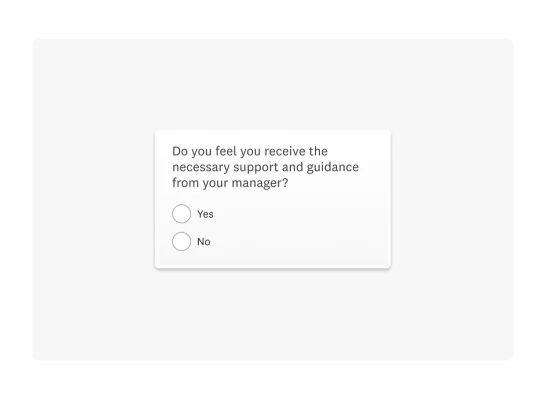

Yes/no and agree/disagree explained

Sometimes, for the sake of brevity and clarity, you might use yes/no or agree/disagree survey questions. When you give someone only two options to choose from, they have to take a stance.

For example, if you ask an employee to answer yes or no to a series of statements about their experience, it might be easy to analyze the data.

But offering only two answer options removes the possibility of a neutral answer or any sort of nuance. How many degrees of “yes” or “yes, but” could get lost when someone has to choose from only two answer options? Think about your survey goal, then decide if two answer options will get you the most helpful insights.

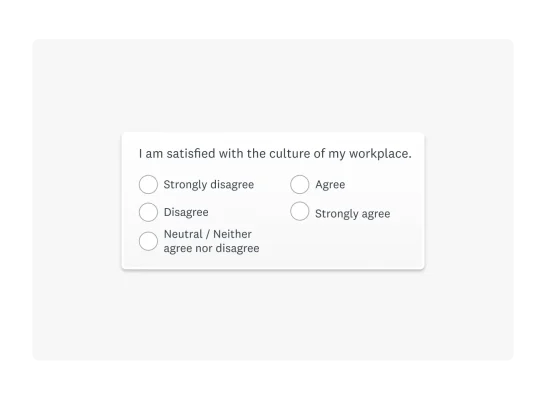

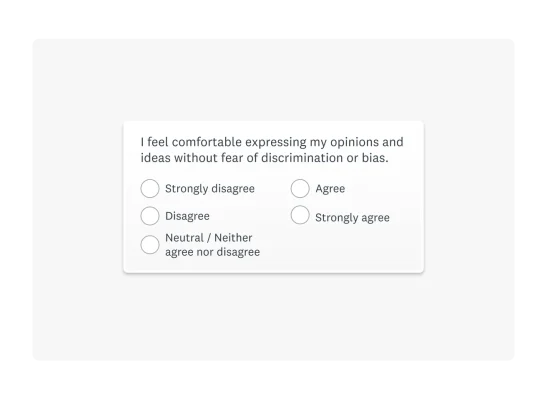

5- and 7-point scales

For more nuance, you should provide more than two answer options. The Likert scale is one of the most widely used methods for measuring feelings, behaviors, or opinions on a scale.

Not all rating scales include a neutral answer option like “Neither agree nor disagree.” But if you’re going to provide a neutral option, it’s generally accepted that 5- and 7-point scales are a good way to do it.

Of course, it’s up to you to decide how many degrees of response are useful. The five answer options for the question, “I am satisfied with the culture of my workplace,” might be enough. If you need a more granular breakdown of employee sentiment, you might provide even more answer options.

CHAPTER 4: KEEP IT SHORT AND RELEVANT

Survey length best practices

You have lots of questions, and it might be tempting to ask as many as you can in a survey. But covering too many topics and asking too much of your respondents may cause them to abandon your survey or rush through your survey, giving you inaccurate answers.

In fact, our recent research shows that surveys are getting shorter on average, with 53% of surveys containing five or fewer questions per page.

Of course, context matters. If you’re running a mandatory, yearly employee self-evaluation, you can worry less about survey length and more about ensuring the questions are clear and unbiased.

Tips for keeping surveys short and relevant

- When it comes to survey length, keep your surveys as short as possible. We recommend aiming for a one-page survey with 10 or fewer questions.

- Use screening questions to qualify or disqualify respondents from your survey. You wouldn’t want someone answering questions about how they use your product if they don’t use it at all.

- Don’t try to answer all of your questions at once. For certain types of surveys, like market research or customer feedback, you can ask someone for permission to contact them with follow-up questions.

- Use survey logic to skip respondents over sections of your survey that don’t apply to them.
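Screening and skip logic both come down to choosing the next question based on the current answer. The sketch below shows one simple way to model that with a lookup table. It is not how any particular survey tool implements logic, and the question IDs, the `"END"` marker, and the `next_question` function are all hypothetical.

```python
def next_question(current_id, answer, rules, order):
    """Return the ID of the next question, or None when the survey ends.

    rules maps (question_id, answer) to a target question ID, or to "END"
    to disqualify the respondent. Without a matching rule, the survey
    simply continues in its normal order.
    """
    target = rules.get((current_id, answer))
    if target == "END":
        return None
    if target is not None:
        return target
    idx = order.index(current_id)
    return order[idx + 1] if idx + 1 < len(order) else None


order = ["uses_product", "usage_frequency", "support_contacted",
         "support_rating", "overall"]
rules = {
    ("uses_product", "No"): "END",           # screening: non-users are disqualified
    ("support_contacted", "No"): "overall",  # skip the support rating
}

print(next_question("uses_product", "Yes", rules, order))      # usage_frequency
print(next_question("support_contacted", "No", rules, order))  # overall
print(next_question("uses_product", "No", rules, order))       # None
```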

Question order best practices

Question order matters because it primes your survey respondents. Priming is when you influence or prepare someone to answer questions a certain way. Here are some question order best practices:

- You can randomize your survey questions to reduce your chances of bias. Your questions will be presented in a random order to respondents.

- Ask open-ended questions towards the end of your survey. That’s because open-ended questions take more time and effort to answer. You might deter people from taking your survey if they have to write out their answers right away.

- Along the lines of question order, be careful about requiring answers to questions. Only require answers to questions that are mandatory for helping you meet your survey goal.

- If you’re screening people out, you should require that question and put it first. If you have important information that you want to collect, consider requiring some of that information later, after people have filled out most of the survey.

- Ask questions about sensitive subjects later in your survey, after you’ve built rapport and trust with your audience.
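These ordering tips can work together: you can randomize most questions while keeping screening questions first and open-ended or sensitive questions last. The following is a rough sketch of that idea using Python’s standard `random` module. The dictionary keys (`screening`, `open_ended`, `sensitive`) and the function name are assumptions made up for this example.

```python
import random


def randomized_order(questions, seed=None):
    """Shuffle the middle of a survey while pinning the start and end.

    Screening questions stay first. Sensitive and open-ended questions
    stay last, in their original order. Everything else is shuffled.
    """
    rng = random.Random(seed)  # pass a seed only when you need repeatable order

    def is_late(q):
        return q.get("open_ended", False) or q.get("sensitive", False)

    first = [q for q in questions if q.get("screening", False)]
    last = [q for q in questions if not q.get("screening", False) and is_late(q)]
    middle = [q for q in questions if not q.get("screening", False) and not is_late(q)]

    rng.shuffle(middle)
    return first + middle + last


questions = [
    {"id": "uses_product", "screening": True},
    {"id": "ease_of_use"},
    {"id": "value_for_money"},
    {"id": "support_quality"},
    {"id": "income_range", "sensitive": True},
    {"id": "other_feedback", "open_ended": True},
]
print([q["id"] for q in randomized_order(questions)])
```

Each respondent would get their own shuffle, so no single question consistently primes the ones that follow it.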

CHAPTER 5: INCENTIVES

Survey incentives best practices

Many times, people take your survey because they want to, not because they have to. For example, say you send a patient satisfaction survey to people who recently got treatment at your medical center.

Patients don’t need to tell you about their experience. But with the right survey introduction, you could compel them to give you feedback because it’ll help you make improvements that are important to them.

In other instances, like employee surveys or training surveys, people are required to respond. But if you’re running market research or trying to reach a particular demographic, you’re asking respondents to do you a favor. That’s where incentives come in.

A survey incentive is what you offer someone to take your survey. It could be as simple as a small gift card for their time or entry into a raffle. Here’s what to consider:

- You can use incentives to improve response rates. For example, research has shown that offering cash to respondents is one of the most effective incentives. But you have to choose an amount based on your audience. You could offer higher dollar amounts to experts in smaller studies, and smaller amounts to a more general population.

- Think about how you’ll offer incentives without sacrificing good data. Just because people take your survey doesn’t mean they’re giving you useful or truthful answers.

- Research has shown that prepaid incentives are more effective in increasing response rates than compensating people after the fact. But you might have to test different types of incentives to see which work best. Different academic research studies have shown different relationships between incentives and response rates.

CHAPTER 6: FINALIZING YOUR SURVEY

Before you send your survey, you want to make sure it’s polished, professional, and accurate. Otherwise people might think it’s suspicious or have trouble submitting their answers. Here are some tips for getting it right before you send.

Add your branding and customize

You might want to add your brand or logo depending on the type of survey you’re running. For example, if you’re surveying customers about a recent purchase, a visually striking survey might capture their attention and be more engaging.

You can also embed your survey into your email or website, making it easy for people to take it and giving it a professional look. Here are a few other tips for improving the visual design of your survey:

- Fonts like Arial, Helvetica, and Verdana are good choices because they’re easy to read.

- Make sure there's enough contrast between your font and background color. Darker text on a lighter background is better for accessibility, too.

- Let people know how long your survey will take, and break your survey up into pages so people don’t have to scroll as much, especially on a mobile device.

Edit and refine your survey

Once you polish your survey design, it’s time to review and proofread it. Here’s how:

- You can preview your survey before you send it out. After you design a survey with SurveyMonkey, choose Preview survey. This lets you see the survey as a respondent would, and you can take it too. Make sure to test all of your survey logic, and see how your survey works on different devices, like desktop or mobile.

- Use AI-powered SurveyMonkey Genius to detect any errors in your survey. It’ll make suggestions on how you can boost your completion rate and even estimate how long it’ll take people to fill it out.

- Emailing people your survey? Send yourself a test email to make sure everything works.

- Collaborate with your team on the survey. Ask them to review your work, preview the survey, and make any necessary changes all in one place.

CHAPTER 7: QUICK START SURVEY CHECKLIST

Cheat sheet: 10 best practices for survey design

Don’t have time to read up on all of our survey best practices? Here are 10 ways to make sure you get reliable results from your survey or online form design.

1. Set a survey goal

Answer these questions before you start your survey: Why are you running this survey? Who are you sending it to? What are you going to do with the results? This will keep your survey focused and actionable.

2. Be clear and concise

Long survey questions? Break them up into shorter sentences. Use straightforward, simple language. Avoid jargon, technical language, and acronyms for general audiences.

Include any special instructions. For example, if you want respondents to choose multiple answers, say, “Select all that apply.”

3. Keep it short

We recommend sending a one-page survey with 10 or fewer questions. Your survey participants will thank you (and probably give you more useful feedback).

4. Limit textbox questions

Most of your questions should be closed-ended, meaning people can choose from a list of answers. Limit your survey to one or two open-ended (textbox) questions.

5. Check for bias

Take a look at your question language. Are you leading someone to answer in a certain way? Are you assuming something about your survey participants that might not be true? If you’re not sure, ask someone with a neutral perspective to review your survey before you send it out.

You can also enable question, page, and answer order randomization in SurveyMonkey (if applicable to your survey).

6. Review question accuracy

Avoid double-barreled questions, which ask for feedback on two topics in one question. And make sure your answer choices don’t overlap, which will skew your data.
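Overlapping answer choices are easy to miss in numeric ranges, such as income or age brackets. As a rough illustration, the sketch below checks a list of inclusive ranges for overlaps. The function name and the sample brackets are hypothetical.

```python
def find_overlaps(ranges):
    """Return neighboring pairs of inclusive (low, high) ranges that overlap.

    Ranges are sorted first, so if any two ranges overlap,
    at least one neighboring pair will be reported.
    """
    ordered = sorted(ranges)
    overlaps = []
    for (lo1, hi1), (lo2, hi2) in zip(ordered, ordered[1:]):
        if lo2 <= hi1:  # the next range starts before the previous one ends
            overlaps.append(((lo1, hi1), (lo2, hi2)))
    return overlaps


# Someone earning exactly $30,000 could pick either of the first two options.
income_brackets = [(0, 30000), (30000, 60000), (60001, 90000)]
print(find_overlaps(income_brackets))  # [((0, 30000), (30000, 60000))]
```

Changing the second bracket to start at 30,001 removes the overlap.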

7. Be sensitive

Sometimes you need to collect demographic information, like age or gender identity. Or you need to ask questions about sensitive topics. When you’re not sure how to ask, rely on pre-written sensitive questions designed by research experts.

If you collect health information, enable HIPAA-compliant features and let people know their information is protected.

8. Give context

In most cases, when you send your survey, let people know:

- Who you are

- Why you’re sending them a survey

- How you’re going to use their information or feedback

- If you’re going to follow up, and how that’ll work

9. Use incentives (wisely)

If you need lots of survey responses, consider using incentives. This is especially helpful for market research or customer feedback, where people might not be as motivated to respond. Popular incentives include gift cards, sweepstakes entries, or promised donations.

10. Preview and test your survey

Survey ready to go? Preview your survey, taking it as if you were a survey respondent. Look out for survey writing errors, logic issues, inconsistent rating scales, or basically anything that’ll hurt your data accuracy.

It’s easy to overlook mistakes and bias in your own surveys. Share your survey with others so they can test it out too.

CHAPTER 8: CUSTOMER SURVEY DESIGN

Customer feedback survey best practices

The customer feedback survey is one of the most popular survey types. Are you asking the right questions? Check out these top 5 questions you should ask customers. And get to know how to run an effective customer service survey.

Customer experience (CX) survey best practices

Think about every single interaction a customer, or potential customer, has with your brand: Advertising, website purchases, customer service, loyalty programs, and more. Then, make targeted improvements to your customer experience strategy by collecting customer feedback at those key touchpoints.

Before you write a survey, check out customizable survey templates that are designed to get you reliable data. This customer experience survey template will help you learn more about your customers and track customer sentiment.

NPS survey best practices

Net Promoter Score (NPS) surveys are a versatile way to track customer loyalty across the customer journey. Here’s how.

- Read this NPS survey question guide for examples of using NPS to calculate and track your customer loyalty across channels.

- Check these 10 tips for building stellar NPS surveys. Learn to personalize surveys, get your timing right, and ask effective follow-up questions.

- Follow these best practices to increase NPS response rates. Tips include how to write a compelling email invitation and how to build trust with your respondents.

Customer satisfaction (CSAT) survey best practices

Want to improve your customer satisfaction surveys? Check out this comprehensive guide on customer satisfaction survey best practices. Here are some other helpful resources:

- How to write customer satisfaction survey questions that’ll get you actionable data.

- No time to write? See 20 customer loyalty survey question examples.

- Need feedback now? Customize this customer satisfaction survey template to get answers today.

CHAPTER 9: EMPLOYEE SURVEY DESIGN

Employee survey best practices

Companies and institutions of every size should care about how their employees feel. Check out the different types of employee surveys designed to measure and improve all aspects of the employee experience.

For example, regularly run employee satisfaction surveys to measure employee happiness. To collect candid feedback from your employees, enable anonymous responses. You may also want to let employees know that the data will be viewed in aggregate and that individual responses can’t identify them.

Candidate experience survey best practices

Make sure you’re providing a great candidate experience to attract top talent and boost your employer brand. Send a candidate experience survey to anyone who interviews at your company or interacts with your recruiters. Ask questions about the effectiveness of your communication, fairness of the interview process, and more.

Employee engagement survey best practices

How enthusiastic and committed are your employees? The answers have a direct impact on your overall employee satisfaction and loyalty. Here are some tips for using employee engagement surveys:

- Show that you’re invested in employee development by asking about desired training, skillset gaps, and how you can support employee growth.

- Measure and track employee loyalty by customizing this employee Net Promoter Score (eNPS) survey template. Check out these top 20 employee survey questions you can add to any eNPS survey.

- Conduct regular employee self-evaluations to measure employee performance and understand how motivated employees are to do good work.

Pulse survey best practices

Organizations use pulse surveys to get real-time insights from employees. These are different from more formal, regularly scheduled employee surveys like performance reviews. Here’s how they work:

- Customize and send this employee pulse survey template at any time for a snapshot of employee sentiment following a big event.

- Regularly monitor how employees feel about your company culture and work environment.

- See if your Diversity, Equity, and Inclusion (DEI) efforts are working. For example, include gender equality survey questions in a pulse survey.

Exit survey best practices

When an employee leaves your company, take the opportunity to ask for their feedback. Send them an exit interview survey to find out about their experience and where you could improve.

Only survey people who are leaving your company voluntarily. (Use a separate process for those who are laid off or let go.) And protect their confidentiality so they feel comfortable giving honest feedback.

CHAPTER 10: RESOURCES

Designing for every audience

Whether you’re writing a survey or questionnaire, consider your audience. As you can see from these survey best practices, there’s not one right way to run a survey. For example, student survey questions will be different from market research surveys. Here are a few more survey best practices and resources by survey type.

UX survey best practices

How easy is it for people to navigate your product? What’s their experience like? What problems are they having? What do they wish they could change? Will your new product or service meet their needs?

These are just a few questions you can answer with a user experience (UX) survey. If you’re looking to generate new ideas, you can ask more open-ended questions. But if you’re testing a product, give respondents more closed-ended questions that’ll help you make a final decision.

Donor survey best practices

If you’re in charge of fundraising or run a nonprofit, use nonprofit surveys to boost your donations. Here are a few tips:

- Collect donor feedback to improve their experience and encourage future donations

- Recruit more volunteers and stay organized with online volunteer applications

- Use a nonprofit donation form to easily collect online donations and contributions

Easy ways to follow survey best practices

- Once you have your survey goal, use our Build with AI feature to automatically create your survey. Customize the survey or use it as-is. Writing your own survey? You can also rely on Answer Genius, which suggests relevant answer choices and phrasing based on your questions.

- Save time on survey design. Choose from more than 400 customizable survey templates and send your survey today. You can also design your survey using pre-written survey questions from our Question Bank. Search for what you need and drag and drop questions into your survey design.

- Rely on SurveyMonkey expertise. Our professional services team can help you design a survey that meets your unique needs.

NPS, Net Promoter & Net Promoter Score are registered trademarks of Satmetrix Systems, Inc., Bain & Company and Fred Reichheld.
